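Gor Gor (哥哥), how are you?

“Days of Being Wild” (阿飛正傳) is still my favorite film; the plot, the cinematography and the music all fit together seamlessly. Yes, eight years have passed, and it turns out to be true: what is meant to be remembered will always be remembered!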

A few years ago I visited the old Pasha shop in Causeway Bay, and now it has been reborn in TST. As a lamb fanatic, I never pass up its delicious lamb skewers.

Nice to try it when there is a 50% off promotion going.

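At today’s tennis meetup I met J, a very special young man born in the 1980s.

First of all, his natural physique is far stronger than most people’s; he gives off an athlete’s aura from head to toe.

Chatting with him, I learned that he used to play basketball regularly but, after repeated injuries and an operation, recently switched to tennis as a safer sport. He has been playing for nearly five months under the personal guidance of his boss (how I envy anyone who still has such a good BOSS). Almost every day, whenever he is free, he goes to the court downstairs and practises forehand and backhand groundstrokes and serves into the empty court (not against a practice wall). At home he watches FYB on YouTube and studies and imitates the strokes and form of other pros.

After four games I fully understood what natural athletic talent means: his five months already equal my five years (though I cannot rule out that I am simply a slow learner when it comes to tennis).

His modesty made me glad to share my experience with him. In particular, I pointed out that he uses a lot of wrist on his one-handed backhand, which can easily lead to injury, and that he hits late because he takes the racket back late.

I do not consider myself qualified to coach anyone, but sharing really does make people happy, especially when I see how quickly a bright player like him picks up my suggestions. Watching him improve so fast makes me want to offer more advice at future meetups so that he can get better sooner. And of course, besides me, I hope other good players will take the chance to guide this modest young man with so much potential.

With his persistent effort and determination, I am sure he will become an outstanding tennis player. Go for it, J!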

Basically, there are three ways to get instant email alerts for hardware failures: VMware vCenter, Dell iDRAC, and Dell IT Assistant (ITA), which I will focus on the most. Two of them, iDRAC and ITA, are specific to Dell PowerEdge servers.

Method 1: How to get hardware failure alert with vCenter

This is the easiest method, but you do need vCenter, so it may not be viable for those using free ESXi (there are scripts to get alerts on free ESXi, but that is not today’s topic).

From the top of the hierarchy in vCenter, click Alarms, then New Alarm, and give it a name, say “Host Hardware Health Monitor”. In Triggers, click Add, then select “Hardware Health Changed” under Event and “Warning” for Status. Add another trigger with the same parameters except “Alert” for Status. Finally, in Actions, choose “Send a notification email” under Action and enter your email address.

Of course, you need to configure SMTP setting in vCenter Server Settings first.
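Before relying on the alarm, it can help to confirm that the SMTP relay you entered in vCenter Server Settings actually accepts mail. The following is only a minimal sketch using Python’s standard `smtplib`; the relay name and addresses are hypothetical placeholders, and it assumes an unauthenticated relay on port 25, which may not match your setup.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Build a plain-text test message similar in spirit to an alarm email."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "SMTP relay test for vCenter alarm emails"
    msg.set_content("If you can read this, the relay accepts mail from this host.")
    return msg


def send_test_message(smtp_host: str, msg: EmailMessage, port: int = 25) -> None:
    """Send the message through the relay; raises on connection or SMTP errors."""
    with smtplib.SMTP(smtp_host, port, timeout=10) as smtp:
        smtp.send_message(msg)


# Usage (hypothetical names, replace with your own):
#   message = build_test_message("vcenter@example.com", "admin@example.com")
#   send_test_message("smtp.example.com", message)
```

If the test message arrives, any remaining problem is more likely in the alarm definition than in the mail path.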

Method 2: How to get hardware failure alert with Dell iDRAC

This is probably even simpler than the above, but it does not report every hardware failure on the ESX host. So far I can say it does not report hard disk failures, which are critical for many, so I would call this a half-working, handicapped solution.

Log in to iDRAC and, under Alerts, set up Email Alerts and the SMTP server. You will need an SMTP server on your dedicated DRAC network to receive these alerts and forward them to your main external email server. Under Platform Events, CHECK Enable Platform Events Filter Alerts and leave all the defaults as they are. As you have probably found out already, and are now scratching your head over, Dell did not include a Storage Warning/Critical Assert Filter. For that question, you need to ask Michael Dell directly.

Btw, I am using iDRAC6, so I am not sure whether your firmware has this feature.

Method 3: How to get hardware failure alert with Dell IT Assistant (ITA)

This is today’s main topic. It is the proper way to implement host alerts via SNMP and SNMP traps, and it does provide a complete solution, but it is quite time-consuming and a bit difficult to set up. I have tried to consolidate the difficult parts, eliminate the unnecessary steps, and use the GUI as much as possible without going into the CLI.

vicfg-snmp.pl --server <hostname> --username <username> --password <password> -t <target hostname>@<port>/<community>

For example, to send SNMP traps from the host esx_host_ip to port 162 on ita_ip using the ita_community_string, use the command:

vicfg-snmp.pl --server esx_host_ip --username root --password password -t ita_ip@162/ita_community_string

For multiple targets, separate the trap targets with commas (no spaces):

vicfg-snmp.pl --server esx_host_ip --username root --password password -t ita_ip@162/ita_community_string,ita_ip2@162/ita_community_string

To show the configuration and test that it is working:

vicfg-snmp.pl --server esx_host_ip --username root --password password --show

vicfg-snmp.pl --server esx_host_ip --username root --password password --test
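If you have several hosts or trap receivers, the `host@port/community` target string is easy to mistype. As a rough sketch, a small Python helper can assemble the argument list. The helper names `format_targets` and `build_command` are my own, and the only flags used are the ones shown above, written with double hyphens.

```python
from typing import List, Tuple

# (trap receiver host, UDP port, community string)
Target = Tuple[str, int, str]


def format_targets(targets: List[Target]) -> str:
    """Join targets as host@port/community, comma-separated with no spaces."""
    return ",".join(f"{host}@{port}/{community}" for host, port, community in targets)


def build_command(server: str, username: str, password: str, *extra: str) -> List[str]:
    """Return the vicfg-snmp.pl argument list for one ESX host."""
    return [
        "vicfg-snmp.pl",
        "--server", server,
        "--username", username,
        "--password", password,
        *extra,
    ]


# Example: the two-target command from the text, as an argument list.
targets = [
    ("ita_ip", 162, "ita_community_string"),
    ("ita_ip2", 162, "ita_community_string"),
]
set_targets = build_command("esx_host_ip", "root", "password", "-t", format_targets(targets))
print(" ".join(set_targets))
```

Keeping the command as a list rather than one string means it can be passed to `subprocess.run` as-is, without worrying about shell quoting of the community string.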

Remember to open UDP port 162 on the ITA server firewall. Simply treat ITA as a software device that receives SNMP traps sent from the various monitored hosts.
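If no traps show up in ITA, one way to tell a firewall problem from a configuration problem is to listen on the UDP port yourself and see whether anything arrives. This is only a sketch: it does not decode SNMP, the function names are hypothetical, the ITA trap service would presumably have to be stopped first so that port 162 is free, and binding to a port below 1024 usually needs administrator rights.

```python
import socket
from typing import Optional, Tuple


def open_listener(bind_addr: str, port: int) -> socket.socket:
    """Open a UDP socket bound to the given address and port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((bind_addr, port))
    return sock


def receive_one(sock: socket.socket, timeout: float) -> Optional[Tuple[bytes, str]]:
    """Wait for one datagram; return (payload, sender_ip) or None on timeout."""
    sock.settimeout(timeout)
    try:
        payload, (sender_ip, _sender_port) = sock.recvfrom(65535)
    except socket.timeout:
        return None
    return payload, sender_ip


# Usage on the ITA server (run the --test command on the ESX side meanwhile):
#   listener = open_listener("0.0.0.0", 162)
#   print(receive_one(listener, timeout=60.0))
#   listener.close()
```

A `None` result after the timeout suggests the packet never reached the server, which points at the firewall or the target setting on the sending host.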

Also, for Windows hosts to send SNMP traps, go to the SNMP Service and, on the Traps tab, configure the trap community name (ita_community_string) and the IP address of the trap destination, which should be ita_ip.

So I ran a test by pulling one of the power supplies on an ESX host, and I got the following alerts in my inbox.

From ITA:

Device:sXXX ip address, Service Tag: XXXXXXX, Asset Tag:, Date:03/22/11, Time:23:18:38:000, Severity:Warning, Message:Name: System Board 1 PS Redundancy 0 Status: Redundancy

From iDRAC:

Message: iDRAC Alert (s002)

Event: PS 2 Status: Power Supply sensor for PS 2, input lost was deasserted

Date/Time: Tue Mar 22 2011 23:26:18

Severity: Normal

Model: PowerEdge RXXX

Service Tag: XXXXXXX

BIOS version: 2.1.15

Hostname: sXXX

OS Name: VMware ESX 4.1.0 build-XXXXXXXX

iDrac version: 1.54

From vCenter:

Target: xxx.xxx.xxx.xxx Previous Status: Gray New Status: Yellow Alarm Definition: ([Event alarm expression: Hardware Health Changed; Status = Yellow] OR [Event alarm expression: Hardware Health Changed; Status = Red]) Event details: Health of Power changed from green to red.

What’s More

Actually there is a Method 4, which uses Veeam Monitor (free version) to send email, but I haven’t had time to check it out. If you know how to do it, please drop me a line, thanks.

Finally, I would strongly suggest that Dell implement a trigger that sends email alerts directly from OpenManage itself. It is simple and would work for most SMB ESX scenarios, which generally have fewer than 10 hosts. You can call this Method 5.

Update Mar-24:

I got ITA working for my PowerConnect switch as well, so the switch can now send SNMP traps to ITA and generate an email when there is a warning or critical issue. Setting up PowerConnect’s SNMP community and SNMP trap settings is really simple. I am starting to like ITA now, and I am glad I am no longer struggling with DMC 2.0.

Finally, there is a very good document about setting up SNMP and SNMP Traps from Dell.

Update Aug-24:

If you only want to know whether any of your server hard disks has failed, you can install LSI MegaRAID Storage Manager, which has a built-in email alert capability.

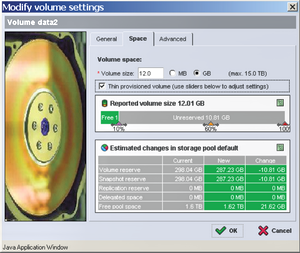

After several months of testing under real-world load, I would say the best way to utilize your SAN storage is to enable Thin Provisioning at BOTH the storage and the host.

One thing you need to check constantly is that used space does not grow to 100%. You can do this by enabling a vCenter alarm on space utilization and staying alert. I once had a VM suddenly go crazy and eat all the space allocated to it, hitting the VMFS threshold and the EqualLogic threshold at the same time.
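To make the idea of a utilization threshold concrete, here is a small sketch of the arithmetic such an alarm performs. The 75% and 85% thresholds are assumptions chosen for illustration, not values taken from my setup, and the function names are hypothetical.

```python
def percent_used(capacity_gb: float, free_gb: float) -> float:
    """Return the used share of a datastore as a percentage."""
    return 100.0 * (capacity_gb - free_gb) / capacity_gb


def classify(used_pct: float, warn_pct: float = 75.0, alert_pct: float = 85.0) -> str:
    """Map a utilization percentage to 'ok', 'warning' or 'alert'.

    The default thresholds are illustrative assumptions only.
    """
    if used_pct >= alert_pct:
        return "alert"
    if used_pct >= warn_pct:
        return "warning"
    return "ok"


# A 1000GB datastore with 100GB free is 90% used.
print(classify(percent_used(1000, 100)))
```

With thin provisioning on both layers, the same check has to pass on the VMFS datastore and on the EqualLogic volume, since either one can fill up first.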

This is the only downside you need to consider, but the trade-off is minimal considering the benefits of Thin Provisioning at BOTH the EqualLogic and ESX levels.

Of course, you should not put a VM that constantly needs more space over time in the same thin-provisioned volume as others.

Finally, VMware has shown that the performance penalty for Thin Provisioning is almost none (i.e., nearly identical to thick format), and, amazingly, VMFS is even faster than RDM in many cases, but that is really another topic: “Should I or Should I NOT use RDM”.

* Note: One very interesting thing I found is that when Thin Provisioning is enabled on the storage side but the VM uses Thick format, the storage utilization shows ONLY what is actually used within that VM. For example, if the thick-format VM is 20GB but only 10GB is actually used, the thin-provisioned storage will show ONLY 10GB allocated, not 20GB.

This is simply fantastic and intelligent! However, it still does not help you over-allocate VMFS space, so you will still need to enable Thin Provisioning on each individual VM.
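The difference between the two layers can be written down as simple arithmetic. The sketch below only models the behaviour described in the note (a thick disk that has not been fully written); the class and function names are my own, and it is not a description of how the array or ESX accounts for space internally.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Vmdk:
    provisioned_gb: float  # size the VM sees
    used_gb: float         # data actually written inside the VM
    thin: bool             # True = thin format on VMFS, False = thick


def vmfs_consumed_gb(disks: List[Vmdk]) -> float:
    """Space taken on the VMFS datastore: thick disks reserve their full size."""
    return sum(d.used_gb if d.thin else d.provisioned_gb for d in disks)


def array_allocated_gb(disks: List[Vmdk]) -> float:
    """Space the thin-provisioned volume reports: only what was written."""
    return sum(d.used_gb for d in disks)


# The 20GB VM with 10GB used, from the note above.
thick = [Vmdk(provisioned_gb=20, used_gb=10, thin=False)]
thin = [Vmdk(provisioned_gb=20, used_gb=10, thin=True)]
print(vmfs_consumed_gb(thick), array_allocated_gb(thick))
print(vmfs_consumed_gb(thin), array_allocated_gb(thin))
```

In this model the array reports 10GB either way, but only the thin disk leaves the other 10GB of VMFS space free for other VMs, which is why both layers need thin provisioning if you want to over-allocate.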

Sometimes you may want to convert an existing Thick disk to Thin by migrating it to another datastore with Storage vMotion, another great tool that works without any downtime. If your storage supports VAAI, the conversion takes only a few minutes to complete.

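A piece of news I saw today; it seems this sort of thing could only happen in mainland China. Poor Gallardo, the “little bull”, and poor Chinese people. Did you see it? The workers with tools in hand wore an eerie smile as they smashed away, which sent a chill down my spine. Do Chinese people really have a deep-rooted “small peasant” gene? I am not sure what Jeremy of Top Gear would say if he ever came across this news.

According to the owner, on 29 November 2010 the recently purchased sports car developed a fault in Qingdao: the engine would not start. After the owner contacted the company’s Qingdao dealership, the car was taken to a designated repair shop by a tow truck arranged by the repair service provider commissioned by the dealership. Unexpectedly, once it arrived at the repair shop, not only was the engine fault left unresolved, but the car’s bumper and chassis were also damaged and cracked.

After repeated attempts to assert consumer rights failed to produce a satisfactory resolution, the owner decided to destroy the Lamborghini. At 3:15 yesterday afternoon, in front of a lighting mall in Qingdao, the owner hired people to smash the Lamborghini Gallardo, a sports car worth 3 million, beyond recognition.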