Equallogic Firmware 5.0.2, MEM, VAAI and ESX Storage Hardware Acceleration

Finally got this wonderful Equallogic plugin working, and after intensive testing in IOmeter the speed improvement is HUGE.

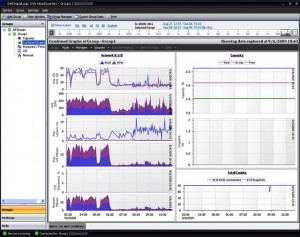

100% Sequential, Read and Write always top 400MB/sec; sometimes I see 450-460MB/sec sustained for 10 minutes from a single array box, and then the PS6000XV box starts to complain that all its interfaces are saturated.
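
A quick sanity check on why the array complains at that point. This sketch assumes four 1GbE iSCSI ports on the active PS6000XV controller (an assumption about the hardware, not something measured here) and ignores TCP/IP/iSCSI header overhead, so it only gives the raw line-rate ceiling.

```python
# Back-of-envelope link saturation check.
# Assumption: 4 x 1GbE iSCSI ports on the active PS6000XV controller.
PORTS = 4
LINK_GBPS = 1.0  # per-port line rate in gigabits per second


def raw_capacity_mb_per_s(ports: int, link_gbps: float) -> float:
    """Raw line rate in MB/s (decimal megabytes), ignoring protocol overhead."""
    return ports * link_gbps * 1000 / 8


def utilisation(observed_mb_per_s: float, ports: int = PORTS, link_gbps: float = LINK_GBPS) -> float:
    """Fraction of raw line rate consumed by the observed throughput."""
    return observed_mb_per_s / raw_capacity_mb_per_s(ports, link_gbps)


if __name__ == "__main__":
    cap = raw_capacity_mb_per_s(PORTS, LINK_GBPS)
    print(f"raw capacity: {cap:.0f} MB/s")
    for observed in (400, 450, 460):
        print(f"{observed} MB/s -> {utilisation(observed):.0%} of line rate")
```

Under that assumption the ceiling is about 500MB/s, so 460MB/s is already above 90% of raw line rate before any protocol overhead is counted.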

For IOPS, 100% Random Read and Write easily reaches 4,000-4,500 IOPS.

The other thing about this Equallogic MEM script is that IT IS JUST TOO EASY to set up the whole iSCSI vSwitch/VMkernel configuration with Jumbo Frames or a Hardware iSCSI HBA!

There are NO MORE complex command lines such as esxcfg-vswitch, esxcfg-vmknic or esxcli swiscsi nic; life is as easy as a single setup.pl --config or --install command. Of course, you need to install the VMware vSphere CLI (vCLI) first, since setup.pl is a vCLI Perl script.

Something worth mentioning: the MPIO parameters can actually be tuned and played with.

    C:\>setup.pl --setparam --name=volumesessions --value=12 --server=10.0.20.2
    You must provide the username and password for the server.
    Enter username: root
    Enter password:
    Setting parameter volumesessions = 12
    Parameter Name Value Max  Min Description
    -------------- ----- ---- --- -----------
    reconfig       240   600  60  Period in seconds between iSCSI session reconfigurations.
    upload         120   600  60  Period in seconds between routing table upload.
    totalsessions  512   1024 64  Max number of sessions per host.
    volumesessions 12    12   3   Max number of sessions per volume.
    membersessions 2     4    1   Max number of sessions per member per volume.

    C:\>setup.pl --setparam --name=membersessions --value=4 --server=10.0.20.2
    You must provide the username and password for the server.
    Enter username: root
    Enter password:
    Setting parameter membersessions = 4
    Parameter Name Value Max  Min Description
    -------------- ----- ---- --- -----------
    reconfig       240   600  60  Period in seconds between iSCSI session reconfigurations.
    upload         120   600  60  Period in seconds between routing table upload.
    totalsessions  512   1024 64  Max number of sessions per host.
    volumesessions 12    12   3   Max number of sessions per volume.
    membersessions 4     4    1   Max number of sessions per member per volume.
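
If you script these changes across several hosts, it can help to parse the table setup.pl prints back and confirm each value sits inside its Min/Max range. A minimal sketch, assuming the whitespace-separated layout of that table (name, value, max, min, then free-text description); `parse_params`, `out_of_range` and `SAMPLE` are hypothetical helpers for illustration, not part of the MEM kit.

```python
import re
from typing import Dict, List, NamedTuple


class Param(NamedTuple):
    value: int
    max_value: int
    min_value: int
    description: str


# Assumed row layout: name, value, max, min, description.
ROW = re.compile(r"^(\w+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(.*)$")


def parse_params(text: str) -> Dict[str, Param]:
    """Parse setup.pl parameter-table rows into a dict keyed by parameter name."""
    params: Dict[str, Param] = {}
    for line in text.splitlines():
        m = ROW.match(line.strip())
        if m:  # header and dashed separator lines do not match
            name, value, hi, lo, desc = m.groups()
            params[name] = Param(int(value), int(hi), int(lo), desc)
    return params


def out_of_range(params: Dict[str, Param]) -> List[str]:
    """Names of parameters whose value falls outside [min, max]."""
    return [n for n, p in params.items() if not (p.min_value <= p.value <= p.max_value)]


SAMPLE = """\
Parameter Name Value Max  Min Description
-------------- ----- ---- --- -----------
reconfig       240   600  60  Period in seconds between iSCSI session reconfigurations.
upload         120   600  60  Period in seconds between routing table upload.
totalsessions  512   1024 64  Max number of sessions per host.
volumesessions 12    12   3   Max number of sessions per volume.
membersessions 4     4    1   Max number of sessions per member per volume.
"""

if __name__ == "__main__":
    params = parse_params(SAMPLE)
    print(params["membersessions"])
    print("out of range:", out_of_range(params))
```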

Yes, why not push it to the maximum, volumesessions=12 and membersessions=4? Each volume won't spread across more than 3 array boxes (members) anyway, so 4 sessions per member × 3 members = 12 sessions per volume, exactly the volumesessions ceiling. The new firmware 5.0.2 also allows 1024 total sessions per pool, which is way, way more than enough: say you have 20 volumes in a pool and 10 ESX hosts, each with 4 NICs for iSCSI, that's still only 20 × 10 × 4 = 800 iSCSI connections.
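
The session arithmetic can be written out as a quick budget check. This is a sketch under the simplifying assumption of one iSCSI connection per host NIC per volume, and `sessions_per_volume_cap` is our reading of the parameter descriptions (membersessions per member, capped by volumesessions), not Dell's actual session-placement logic; both helper names are hypothetical.

```python
# Per-pool connection limit for FW 5.0.2, as stated in the post.
POOL_CONNECTION_LIMIT = 1024


def connection_estimate(volumes: int, hosts: int, nics_per_host: int) -> int:
    """Rough estimate: one iSCSI connection per host NIC per volume."""
    return volumes * hosts * nics_per_host


def sessions_per_volume_cap(membersessions: int, members_spanned: int, volumesessions: int) -> int:
    """Assumed model: membersessions per member the volume spans, capped by volumesessions."""
    return min(membersessions * members_spanned, volumesessions)


if __name__ == "__main__":
    total = connection_estimate(volumes=20, hosts=10, nics_per_host=4)
    verdict = "within" if total <= POOL_CONNECTION_LIMIT else "over"
    print(f"{total} connections, {verdict} the {POOL_CONNECTION_LIMIT} per-pool limit")
    # A volume spread over 3 members with membersessions=4 hits the volumesessions=12 ceiling.
    print(sessions_per_volume_cap(membersessions=4, members_spanned=3, volumesessions=12))
```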

Update Jan-21-2011

Do NOT over-allocate membersessions beyond the number of available iSCSI NICs. I set membersessions = 4 when I only had 2 NICs, and high TCP retransmits started to occur!
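
One way to bake that lesson into a deployment script: never ask for more member sessions than there are iSCSI-bound VMkernel NICs, and stay inside the 1 to 4 range setup.pl reports. A minimal sketch; `recommended_membersessions` and `setparam_args` are hypothetical helpers, and the argument list only mirrors the setup.pl --setparam command used in this post (nothing is executed).

```python
from typing import List

MEMBERSESSIONS_MIN, MEMBERSESSIONS_MAX = 1, 4  # Min/Max as reported by setup.pl


def recommended_membersessions(iscsi_nics: int) -> int:
    """Cap membersessions at the number of iSCSI NICs, within setup.pl's allowed range."""
    if iscsi_nics < 1:
        raise ValueError("need at least one iSCSI-bound NIC")
    return max(MEMBERSESSIONS_MIN, min(iscsi_nics, MEMBERSESSIONS_MAX))


def setparam_args(value: int, server: str) -> List[str]:
    """Build the setup.pl --setparam invocation as an argument list (not run here)."""
    return ["setup.pl", "--setparam", "--name=membersessions", f"--value={value}", f"--server={server}"]


if __name__ == "__main__":
    for nics in (2, 4, 6):
        args = setparam_args(recommended_membersessions(nics), "10.0.20.2")
        print(nics, "NICs ->", " ".join(args))
```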

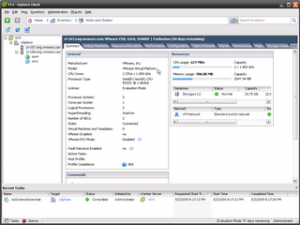

To check whether Equallogic MEM has been installed correctly, run:

    C:\>setup.pl --query --server=10.0.20.2
    You must provide the username and password for the server.
    Enter username: root
    Enter password:
    Found Dell EqualLogic Multipathing Extension Module installed: DELL-eql-mem-1.0.0.130413
    Default PSP for EqualLogic devices is DELL_PSP_EQL_ROUTED.
    Active PSP for naa.6090a078c06ba23424c914a0f1889d68 is DELL_PSP_EQL_ROUTED.
    Active PSP for naa.6090a078c06b72405fc9b4a0f1880d96 is DELL_PSP_EQL_ROUTED.
    Active PSP for naa.6090a078c06b722496c9c4a2f1888d0e is DELL_PSP_EQL_ROUTED.
    Found the following VMkernel ports bound for use by iSCSI multipathing: vmk2 vmk3 vmk4 vmk5
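
On a bigger cluster it gets tedious to eyeball this output host by host. A minimal sketch that parses the setup.pl --query text and flags any device whose active PSP is not DELL_PSP_EQL_ROUTED. The regexes are derived from this single sample, so treat the line formats as assumptions that may differ in other MEM versions; `mem_version_tuple` is a hypothetical helper that reads the first three dotted numbers as major.minor.patch.

```python
import re
from typing import Dict, List, Optional, Tuple

EXPECTED_PSP = "DELL_PSP_EQL_ROUTED"

# Assumed line formats, taken from the sample output in this post.
MEM_RE = re.compile(r"Multipathing Extension Module installed: (\S+)")
PSP_RE = re.compile(r"Active PSP for (\S+) is (\S+?)\.?$")
VMK_RE = re.compile(r"bound for use by iSCSI multipathing: (.+)$")


def parse_query(text: str) -> Tuple[Optional[str], Dict[str, str], List[str]]:
    """Return (MEM package string, {device: active PSP}, [bound vmk ports])."""
    version: Optional[str] = None
    psp: Dict[str, str] = {}
    vmks: List[str] = []
    for raw in text.splitlines():
        line = raw.strip()
        m = MEM_RE.search(line)
        if m:
            version = m.group(1).rstrip(".")
            continue
        m = PSP_RE.search(line)
        if m:
            psp[m.group(1)] = m.group(2)
            continue
        m = VMK_RE.search(line)
        if m:
            vmks = m.group(1).split()
    return version, psp, vmks


def non_routed(psp: Dict[str, str]) -> List[str]:
    """Devices whose active PSP is not the EqualLogic routed PSP."""
    return [dev for dev, name in psp.items() if name != EXPECTED_PSP]


def mem_version_tuple(package: str) -> Tuple[int, int, int]:
    """Hypothetical: read the first three dotted numbers as major.minor.patch."""
    m = re.search(r"(\d+)\.(\d+)\.(\d+)", package)
    if not m:
        raise ValueError(f"no version in {package!r}")
    major, minor, patch = (int(x) for x in m.groups())
    return major, minor, patch


SAMPLE = """\
Found Dell EqualLogic Multipathing Extension Module installed: DELL-eql-mem-1.0.0.130413
Default PSP for EqualLogic devices is DELL_PSP_EQL_ROUTED.
Active PSP for naa.6090a078c06ba23424c914a0f1889d68 is DELL_PSP_EQL_ROUTED.
Active PSP for naa.6090a078c06b72405fc9b4a0f1880d96 is DELL_PSP_EQL_ROUTED.
Active PSP for naa.6090a078c06b722496c9c4a2f1888d0e is DELL_PSP_EQL_ROUTED.
Found the following VMkernel ports bound for use by iSCSI multipathing: vmk2 vmk3 vmk4 vmk5
"""

if __name__ == "__main__":
    version, psp, vmks = parse_query(SAMPLE)
    print("MEM:", version, "| older than 1.0.1:", mem_version_tuple(version) < (1, 0, 1))
    print("devices not on routed PSP:", non_routed(psp) or "none")
    print("bound vmk ports:", ", ".join(vmks))
```

Running it against the output of each host (for example, saved to a text file per host) gives a quick pass/fail list instead of reading every naa line by hand.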

One word to summarize the whole thing: “FANTASTIC”!

More about VAAI, from the EQL FW 5.0.2 Release Notes:

Support for vStorage APIs for Array Integration

Beginning with version 5.0, the PS Series Array Firmware supports VMware vStorage APIs for Array Integration (VAAI) for VMware vSphere 4.1 and later. The following new ESX functions are supported:

•Hardware Assisted Locking – Provides an alternative means of protecting VMFS cluster file system metadata, improving the scalability of large ESX environments sharing datastores.

•Block Zeroing – Enables storage arrays to zero out a large number of blocks, speeding provisioning of virtual machines.

•Full Copy – Enables storage arrays to make full copies of data without requiring the ESX Server to read and write the data.

VAAI provides hardware acceleration for datastores and virtual machines residing on array storage, improving performance with the following:

•Creating snapshots, backups, and clones of virtual machines

•Using Storage vMotion to move virtual machines from one datastore to another without storage I/O

•Data throughput for applications residing on virtual machines using array storage

•Simultaneously powering on many virtual machines

Refer to the VMware documentation for more information about vStorage and VAAI features.
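
To connect the three primitives to what you can actually check on an ESX 4.1 host: each one rides on a standard SCSI command (ATS uses COMPARE AND WRITE, Block Zeroing uses WRITE SAME, Full Copy uses EXTENDED COPY) and is toggled by an advanced setting. The sketch below maps them and parses `esxcfg-advcfg -g` output; the "Value of <name> is <n>" output format is an assumption to verify on your own host, and `SAMPLE` is illustrative text, not captured from the hosts above.

```python
import re
from typing import Dict

# VAAI primitive -> (SCSI command it uses, ESX 4.1 advanced setting; 1 = enabled).
VAAI_PRIMITIVES = {
    "Hardware Assisted Locking": ("COMPARE AND WRITE (ATS)", "/VMFS3/HardwareAcceleratedLocking"),
    "Block Zeroing": ("WRITE SAME", "/DataMover/HardwareAcceleratedInit"),
    "Full Copy": ("EXTENDED COPY (XCOPY)", "/DataMover/HardwareAcceleratedMove"),
}

# Assumed output of `esxcfg-advcfg -g <option>`, e.g. "Value of HardwareAcceleratedMove is 1".
VALUE_RE = re.compile(r"Value of (\w+) is (\d+)")


def enabled_settings(advcfg_output: str) -> Dict[str, bool]:
    """Map each setting name found in the output to whether it reads 1."""
    return {name: value == "1" for name, value in VALUE_RE.findall(advcfg_output)}


def report(advcfg_output: str) -> Dict[str, bool]:
    """Per primitive: True if its setting reads 1; a missing setting counts as off."""
    state = enabled_settings(advcfg_output)
    return {
        primitive: state.get(option.rsplit("/", 1)[1], False)
        for primitive, (_, option) in VAAI_PRIMITIVES.items()
    }


SAMPLE = """\
Value of HardwareAcceleratedMove is 1
Value of HardwareAcceleratedInit is 1
Value of HardwareAcceleratedLocking is 1
"""

if __name__ == "__main__":
    for primitive, on in report(SAMPLE).items():
        scsi_cmd, option = VAAI_PRIMITIVES[primitive]
        print(f"{primitive:<26} {scsi_cmd:<24} {option:<36} {'on' if on else 'off'}")
```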

Update Aug-29-2011

I noticed there is a minor update for MEM (Apr-2011); the latest version is v1.0.1. Since I haven't hit the error it fixes, and my rule of thumb is "if nothing is broken, don't update", I won't update MEM for the moment.

Finally, I wonder whether MEM will work with vSphere 5.0, as the release notes say “The EqualLogic MEM V1.0.1 supports vSphere ESX/ESXi v4.1”.

Issue Corrected in This Release: Incorrect Determination that a Valid Path is Down

Under rare conditions when certain types of transient SCSI errors occur, the EqualLogic MEM may incorrectly determine that a valid path is down. With this maintenance release, the MEM will continue to try to use the path until the VMware multipathing infrastructure determines the path is permanently dead.

1. Use Veeam’s great free tool FastSCP to upload the original.vmdk file (nothing else; that single vmdk file is enough, not even the vmx or any other associated files) to /vmfs/san/import/original.vmdk. In case you didn’t know, Veeam FastSCP is way faster than the old WinSCP or browsing VMFS from the VI Client.

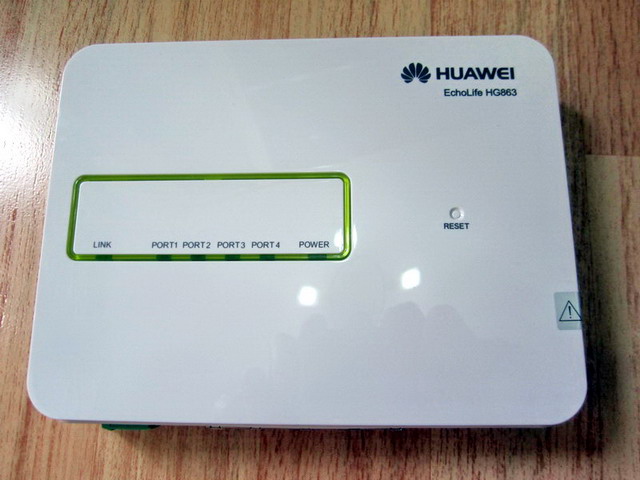

We found that EQL I/O test performance was low: only 1 path was active under 2-path MPIO, and disk latency was particularly high during writes on the newly configured array.

For the whole month, my mind was full of VMware, ESX 4.1, Equallogic, MPIO, SANHQ, iSCSI, VMkernel, Broadcom BACS, Jumbo Frame, IOPS, LAG, VLAN, TOE, RSS, LSO, Thin Provisioning, Veeam, Vizioncore, Windows Server 2008 R2, etc.

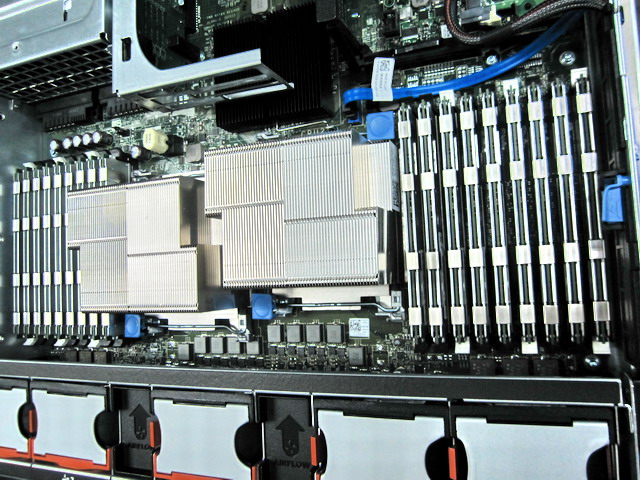

What about 3DPC? The old story applies: it runs at 800MHz. I tested it and proved it: populating 3DPC and filling all 18 DIMMs (i.e., 144GB) makes the server take twice as long to verify memory and boot. So it’s better not to, as losing 40% of memory bandwidth is a big deal for ESX.
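
The numbers behind that decision, as a quick calculation. Assumption (not stated above): the baseline memory speed with fewer DIMMs per channel is 1333MHz, and peak bandwidth scales linearly with memory clock; under that assumption, dropping to 800MHz costs about 40% of peak bandwidth, and 144GB across 18 slots means 8GB DIMMs.

```python
def bandwidth_loss(baseline_mhz: float, reduced_mhz: float) -> float:
    """Fractional loss in peak memory bandwidth when the DIMM clock drops."""
    return 1 - reduced_mhz / baseline_mhz


def dimm_size_gb(total_gb: int, slots: int) -> float:
    """Per-DIMM capacity when total memory is split evenly across slots."""
    return total_gb / slots


if __name__ == "__main__":
    # Assumed 1333MHz baseline versus the 800MHz 3DPC speed from the post.
    print(f"{bandwidth_loss(1333, 800):.0%} bandwidth loss at 3DPC")
    print(f"{dimm_size_gb(144, 18):g}GB per DIMM")
```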

What about 3DPC? Old story applies, it’s 800Mhz, tested it and proved it and if populate with 3DPC and fully filled that 18 DIMMs (ie, 144GB), it will take twice the time to verify and boot the server, so it’s better not to as you lost 40% bandwidth is very important for ESX.