VMware finally has to face the truth: sales are going down, and competitor Microsoft is chasing hard, right on its heels.

This week’s VMworld 2012 got off to a great start, with VMware’s new CEO Pat Gelsinger announcing the cancellation of the vTax, and even outgoing CEO Paul Maritz said the vRAM thing was indeed TOO COMPLICATED to implement.

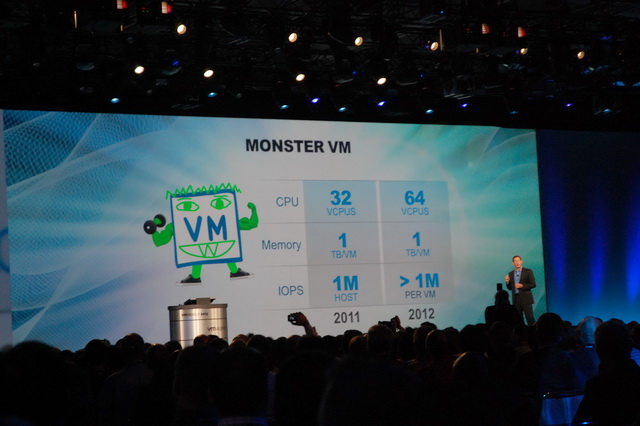

So I am sure we will see 1 TB/2 TB ESX hosts become the norm pretty soon, not to mention that the cost per VM will be driven down considerably and become much more competitive with Hyper-V or Xen.

Finally, I can’t wait to attend the latest VMware conference in Hong Kong and experience the exciting new features in ESX 5.1!

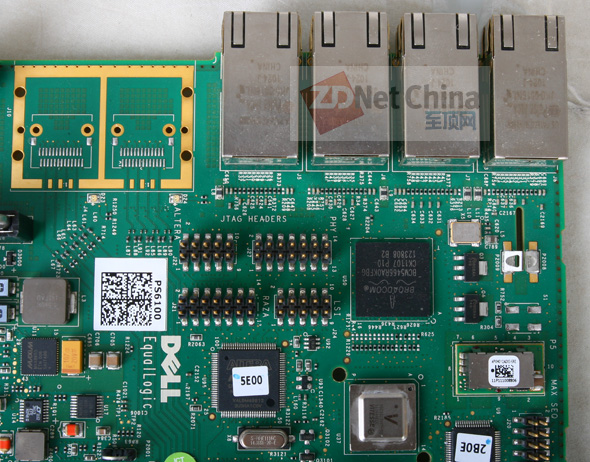

PS. Congratulations to EqualLogic, which was named a finalist in the New Technology category of the VMworld 2012 Awards.

VMware vRAM still in place for VSPPs

VMware isn’t completely “striking the word vRAM from the vocabulary of the vDictionary,” as CEO Pat Gelsinger said at VMworld. The controversial pricing model will still apply to those running vSphere in public clouds.

Participants in the VMware Service Provider Program (VSPP) will continue to charge according to the amount of memory allocated per virtual machine (VM). Some service providers said they have no major problems with vRAM licensing and pricing because it aligns well with the public cloud’s pay-per-use business model.

“We’re fairly happy with where we are right now,” said Pat O’Day, chief technology officer of VSPP partner BlueLock LLC.

But a West Coast-based VSPP partner said VMware vRAM can put VSPPs at a disadvantage against other service providers, especially when it comes to high-density hosts.

“We’re seeing a lot of large service providers and industry players moving off of VMware and into open source … and that’s putting competitive pressure on us,” said the partner, who spoke on the condition of anonymity.

How VMware vRAM works for VSPPs

The VMware vRAM allocation limits for VSPPs are different from those abolished at VMworld, which were for business customers purchasing vSphere. For VSPPs, vRAM is capped at 24 GB of reserved memory per VM, and VSPPs are charged for at least 50% of the memory they reserve for each VM.

Also, unlike with the licenses for private organizations, VSPP vRAM is not associated with a CPU-based entitlement, and it does not require the purchase of additional licenses to accommodate memory pool size limits.
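The two VSPP rules described above (a 24 GB per-VM cap and a 50% floor on reserved memory) can be sketched as a small calculation. This is only an illustration of one reading of those rules, not VMware's actual billing formula: the function name, the `metered_gb` input, and the order in which the floor and the cap are applied are all assumptions made for the example.

```python
# Hypothetical sketch of the VSPP vRAM rules described in the article.
# Assumptions (not from VMware documentation): the provider has some
# metered figure per VM, the 50% floor is applied first, and the
# 24 GB cap is applied last.

VRAM_CAP_GB = 24.0          # per-VM cap mentioned in the article
MIN_BILLED_FRACTION = 0.5   # "at least 50% of the memory they reserve"


def billable_vram_gb(reserved_gb: float, metered_gb: float) -> float:
    """Return the vRAM (in GB) billed for one VM under the assumed rules."""
    if reserved_gb < 0 or metered_gb < 0:
        raise ValueError("memory sizes must be non-negative")
    # Never bill less than half of what was reserved for the VM.
    floor_gb = MIN_BILLED_FRACTION * reserved_gb
    # Never bill more than the per-VM cap.
    return float(min(max(metered_gb, floor_gb), VRAM_CAP_GB))


if __name__ == "__main__":
    print(billable_vram_gb(reserved_gb=8, metered_gb=2))    # 4.0 (floor applies)
    print(billable_vram_gb(reserved_gb=8, metered_gb=6))    # 6.0
    print(billable_vram_gb(reserved_gb=64, metered_gb=40))  # 24.0 (cap applies)
```

Under this reading, a very large VM never counts for more than 24 GB, which would explain why a dense 512 GB host is not penalized in proportion to its installed RAM.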

The infrastructure for BlueLock Virtual Datacenters runs on Hewlett-Packard servers, packing in 512 GB of RAM each. VMware licensing is a very small percentage of BlueLock’s overall costs, O’Day said. Given the 24 GB per VM cap, the company could probably add even more RAM to each server, O’Day said.

“But that’s not worth disrupting our business model to do,” he added.

VSPPs’ VMware vRAM reactions

VMware discontinued the wildly unpopular vRAM licensing and pricing model for business customers after more than a year of discontent. Some VSPPs worried the move away from vRAM on the enterprise side would also affect their businesses, according to an email one VSPP software distributor sent to service provider clients.

“We have received feedback from quite a few partners who have expressed concern to us over the announcement that VMware is moving away from vRAM,” the distributor said in the email, which was obtained by SearchCloudComputing.com. “The question to a person seems to be, ‘How does this impact me as a VSPP partner whose billing is completely based around vRAM?’ The answer is, not at all.”

But the West Coast partner, who received that email, said the cost of memory on high-density hosts can eat into VSPPs’ margins or force VSPPs to pass the costs on to end-user customers. This partner offers services including hosted virtual desktops that don’t run on VMware View, and vRAM can cost as much as $7 per gigabyte under this model.

“If you’re trying to provision a desktop with four gigabytes of RAM … the memory alone is $30,” the partner said. “You’re at a significant disadvantage as the market tries to bring the price down.”
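The partner's figure is easy to sanity-check. The sketch below simply multiplies memory size by a per-gigabyte rate, using the "as much as $7 per gigabyte" ceiling the partner cited; the function name is made up for the example, and the billing period and any discounts or minimums are not modeled.

```python
# Rough check of the partner's desktop example.
# Assumption: a flat per-GB rate with no discounts, floors, or caps.

RATE_USD_PER_GB = 7.0  # upper bound quoted by the partner


def vram_cost_usd(memory_gb: float, rate_usd_per_gb: float = RATE_USD_PER_GB) -> float:
    """Return the vRAM charge for a VM of the given memory size."""
    if memory_gb < 0 or rate_usd_per_gb < 0:
        raise ValueError("inputs must be non-negative")
    return memory_gb * rate_usd_per_gb


if __name__ == "__main__":
    print(vram_cost_usd(4))  # 28.0
```

At the quoted ceiling, a 4 GB desktop works out to $28, which is in line with the roughly $30 the partner mentions.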

Furthermore, the complexity of the licensing model makes it difficult to set the monthly costs of vRAM based on usage, the partner said.

“You basically hand VMware your wallet and let them take whatever they want,” the partner added. “… The pricing is so complicated, we have no way to estimate how much we’re going to pay VMware at the end of the month.”

In a blog post discussing the continuation of vRAM for VSPPs, VMware said the vRAM model allows service providers to sell more computing capability from the same infrastructure, and to control the memory oversubscription and service profit margin through allocated vRAM delivered to customers.

VMware vRAM timeline

July 2011: vRAM licensing and pricing model announced at vSphere 5 launch.

Aug. 2011: VMware increases vRAM limits in response to complaints.

Aug. 2012: At VMworld, VMware eliminates vRAM licensing and pricing for business customers.
