Battles Between the Two: Veeam Backup & Replication and Acronis vmProtect

To be exact, I wouldn’t call it professional, but somehow what they wrote between the lines did reveal the truth.

Btw, I love both products and use both in my clients’ environments; each has its advantages and disadvantages. IMO, Veeam is the leader in the virtual world, while Acronis continues to lead in the physical world and inside each VM, as it allows more granular restores.

The whole fight started because Anton was pissed off that Sergey described Acronis’ own method of running a VM directly from a backup image via NFS without saying a word about the fact that Veeam invented this feature in the first place (i.e., Acronis is the copycat).

In the end it came down to Anton from Veeam vs. Sergey from Acronis. Fight! Round 1, KO!

Sergey Kandaurov wrote:

In vmProtect we offer an alternative solution which can effectively replace replication in most scenarios: a virtual machine can be started directly from a compressed and deduped backup. It only takes several seconds, sometimes up to a minute, to create a virtual NFS share, mount it to the ESXi host, register and power on the VM.

Anton responded:

You only forgot to mention that this feature was originally invented by Veeam, has been available as a part of Veeam Backup & Replication for 1 year now, and is patent-pending (as you are well aware). While it is a smart thing to copy the leader (not for long though), I think you should at least be fair to the inventor, and should have referenced us (in this topic about Veeam), instead of making it look like something you guys have unique to replace your missing replication with.

Nevertheless, back to your replication comment, I must mention that you seem to completely lack any understanding of when and how replication is used in disaster recovery. Replicas are to be used when your production VMware environment goes down, which means you cannot even run your appliance (vmProtect can only run on VMware infrastructure, and does not support being installed on standalone physical server, am I correct)? Also, even if your appliance is somehow magically able to work after VMware environment or production storage disaster, I would love to see it running a few dozens of site’s VMs through NFS server, the disk I/O performance of those VMs, and how they will be meeting their SLAs. You clearly still have a lot to learn about replication and production environments, if you are positioning vPower as an alternative to replication.

I would have never replied to you, because I generally avoid vendor battles on public forums, just like I avoid advertizing Veeam products on these forums (instead, I only respond to specific questions, comments or remarks about Veeam). With thousands of daily visitors on Veeam’s own forums, I have a place to talk about my stuff. But this one looked so very special, I just could not pass it. First, clearly your only intent and sole purpose of registering on these forums a few days ago was to advertize your solution (which is NOT the purpose of these forums). Not a good start already, however, it would be understandable if you created the new topic. But instead, you have chosen the topic where OP is asking about the two very specific solutions (assume you found it by searching for Veeam), and crashed the party with blatant advertizement paired with pure marketing claims having nothing in common with reality. And that was really hard to let go, I am sorry.

Why, instead, have you decided to go with a 100% copycat of Veeam’s patent-pending, virtualization-specific architecture, which is nowhere near what you have patented? No need to answer, I perfectly realize that this was because your patented approach simply would not work with virtualization, as in-guest logic is not virtualization aware – so things like Storage vMotion (which is essential to finalize VM recovery) would not produce the desired results if you went that route.

![51a[1] 51a[1]](http://www.modelcar.hk/wp-content/uploads/2011/09/51a1.jpg) Does it make sense to use “not so reliable” MLC SSD for enterprise storage? Well, it really depends on your situation. Hitachi is the

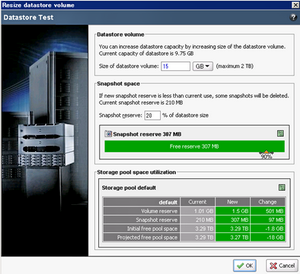


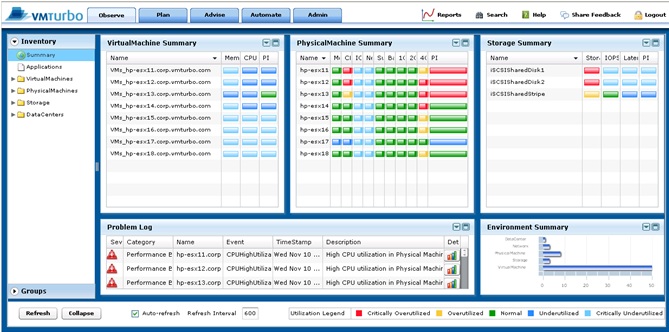

The first release of HIT/VE (version 3.0.1) came out back in April 2011, and it did make storage administrators’ lives a lot easier, particularly if you are managing hundreds of volumes. To be honest, though, I don’t mind creating volumes, assigning them to ESX hosts and setting the ACLs manually; it only takes a few minutes more. Knowing that every step is carried out correctly matters more to me, because I stay in control of everything. Obviously, I don’t have a huge SAN farm to manage, so HIT/VE is not a lifesaver for me.

If you have already got Veeam Monitor (free version), then this is one tool you shouldn’t miss. It complements Veeam’s monitoring tool: with VMTurbo Community Version (Monitor + Reporter) you can see details that are otherwise only available in Veeam’s paid version. Furthermore, it clearly displays a lot of very useful information, such as an IOPS breakdown of individual VMs.

![dellstorage082211[1] dellstorage082211[1]](http://www.modelcar.hk/wp-content/uploads/2011/08/dellstorage0822111.png)

![nutanix_appliannce_architecture[1] nutanix_appliannce_architecture[1]](http://www.modelcar.hk/wp-content/uploads/2011/08/nutanix_appliannce_architecture1.jpg)