It’s not official, but after comparing the results, I would still say EqualLogic ROCKS!

Finally, I wonder why there aren’t more results from LeftHand, NetApp, 3PAR and HDS.

My own result:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

TABLE OF RESULTS

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

SERVER TYPE: VM on ESX 4.1 with EQL MEM Plugin

CPU TYPE / NUMBER: vCPU / 1

HOST TYPE: Dell PE R710, 96GB RAM; 2 x XEON 5650, 2,66 GHz, 12 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EqualLogic PS6000XV x 1 (15K) / 14+2 600GB Disks / RAID10 / 500GB Volume, 1MB Block Size

SAN TYPE / HBAs : ESX Software iSCSI, Broadcom 5709C TOE+iSCSI Offload NIC

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……..5.4673……….10223.32………319.48

RealLife-60%Rand-65%Read……15.2581……….3614.63………28.24

Max Throughput-50%Read……….6.4908……….4431.42………138.48

Random-8k-70%Read……………..15.6961……….3510.34………27.42

EXCEPTIONS: CPU Util. 83.56, 47.25, 88.56, 44.21%;

##################################################################################
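The MB/s column is redundant once the transfer size is known. Working backwards from the figures above, the Max Throughput tests appear to use 32 KB transfers and the RealLife and Random-8k tests 8 KB transfers, with 1 MB counted as 1024 KB; every row of this result matches that to two decimals. The sketch below assumes those inferred sizes (they are not stated anywhere in this post) and recomputes MB/s from IOPS as a quick sanity check on a submitted result. The names `BLOCK_KB`, `expected_mb_per_sec` and `check_row` are illustrative only.

```python
# Transfer size in KB per IOMeter test, inferred from the posted numbers (assumption).
BLOCK_KB = {
    "Max Throughput-100%Read": 32,
    "RealLife-60%Rand-65%Read": 8,
    "Max Throughput-50%Read": 32,
    "Random-8k-70%Read": 8,
}


def expected_mb_per_sec(test: str, iops: float) -> float:
    """MB/s implied by an IOPS figure, counting 1 MB as 1024 KB."""
    return iops * BLOCK_KB[test] / 1024


def check_row(test: str, iops: float, mb_per_sec: float, tol: float = 0.02) -> bool:
    """True if the reported MB/s is within a relative tolerance of the implied value."""
    expected = expected_mb_per_sec(test, iops)
    return abs(expected - mb_per_sec) <= tol * expected


# First result set in this post: test -> (IOPS, MB/s)
my_result = {
    "Max Throughput-100%Read": (10223.32, 319.48),
    "RealLife-60%Rand-65%Read": (3614.63, 28.24),
    "Max Throughput-50%Read": (4431.42, 138.48),
    "Random-8k-70%Read": (3510.34, 27.42),
}

for test, (iops, mbps) in my_result.items():
    implied = expected_mb_per_sec(test, iops)
    print(f"{test}: reported {mbps}, implied {implied:.2f}, ok={check_row(test, iops, mbps)}")
```

Run against the Sun 7410 Random-8k row further down (2662 IOPS, 60 MB/s), the check fails, which suggests one of those two figures was mistyped in that submission.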

Compared with other EqualLogic users’ results:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

TABLE OF RESULTS

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

SERVER TYPE: VM ON ESX 3.0.1

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: Dell PE6850, 16GB RAM; 4x XEON 7020, 2,66 GHz, DC

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EQL PS3600 x 1 / 14+2 SAS10k / R50

SAN TYPE / HBAs : iSCSI, QLA4050 HBA

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……..__17______……….___3551___………___111____

RealLife-60%Rand-65%Read……___21_____……….___2550___………____20____

Max Throughput-50%Read……….____10____……….___5803___………___181____

Random-8k-70%Read……………..____23____……….___2410___………____19____

EXCEPTIONS: VCPU Util. 60-46-75-46 %;

##################################################################################

SERVER TYPE: VM.

CPU TYPE / NUMBER: VCPU / 1 (JUMBO FRAMES, MPIO RR)

HOST TYPE: Dell PE2950, 32GB RAM; 2x XEON 5440, 2,83 GHz, DC

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EQL PS5000 x 1 / 14+2 Disks (SATA) / R5

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……..____9,6___……….____5093___………___159,00_

RealLife-60%Rand-65%Read……____26,6___……….___1678___………___13,11__

Max Throughput-50%Read………._____8,5__……….____4454___………___139,20_

Random-8k-70%Read…………….._____31,3_……….____1483___………___11,58____

EXCEPTIONS: CPU Util.-XX%;

##################################################################################

SERVER TYPE: PHYS

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: DL380 G3, 4GB RAM; 2X XEON 3.20 GHZ

STORAGE TYPE / DISK NUMBER / RAID LEVEL: PS6000XV / 14+2 DISK (15K SAS) / R10

NOTES: 2 NIC, MS iSCSI, no-jumbo, flowcontrol on

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……..___13.60____……….___3788____………___118____

RealLife-60%Rand-65%Read…….___14.87____……….___3729____………___29.14__

Max Throughput-50%Read………___12.75____……….___4529____………___141____

Random-8k-70%Read…………..___15.42____……….___3580____………___27.97__

EXCEPTIONS: CPU Util.-XX%;

##################################################################################

SERVER TYPE: PHYS

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: DL380 G3, 4GB RAM; 2X XEON 3.20 GHZ

STORAGE TYPE / DISK NUMBER / RAID LEVEL: PS6000XV / 14+2 DISK (15K SAS) / R50

NOTES: 2 NIC, MS iSCSI, no-jumbo, flowcontrol off, ntfs aligned w/ 64k alloc, mpio-rr

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……..____9.84____……….___5677____………___177_____

RealLife-60%Rand-65%Read…….___13.20____……….___3712____………___29.00___

Max Throughput-50%Read………____8.39____……….___6742____………___211_____

Random-8k-70%Read…………..___13.91____……….___3783____………___29.55___

EXCEPTIONS: CPU Util.-XX%;

##################################################################################
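The two DL380 G3 runs above put the same PS6000XV in RAID 10 and then RAID 50. A common back-of-envelope way to compare RAID levels is the write penalty: under the usual textbook model each random front-end write costs about 2 back-end disk I/Os on RAID 10 and about 4 on RAID 5/50, while a read costs 1. The sketch below applies that model (an assumption: it ignores controller cache and full-stripe writes) to the RealLife results, which are 65% reads. The two runs also differ in flow control, NTFS alignment and MPIO settings, so the model alone does not explain the differences between them (for example, the RAID 50 run is ahead on Random-8k).

```python
# Textbook write penalties: back-end disk I/Os per random front-end write (assumption;
# arrays with write-back cache or full-stripe writes can do better).
WRITE_PENALTY = {"R10": 2, "R5": 4, "R50": 4, "R6": 6}


def backend_iops(frontend_iops: float, read_fraction: float, raid: str) -> float:
    """Estimate back-end disk IOPS: reads cost 1 I/O, writes cost the RAID penalty."""
    write_fraction = 1.0 - read_fraction
    return frontend_iops * (read_fraction + write_fraction * WRITE_PENALTY[raid])


# RealLife-60%Rand-65%Read IOPS from the two PS6000XV runs above
r10 = backend_iops(3729, 0.65, "R10")
r50 = backend_iops(3712, 0.65, "R50")
print(f"R10: ~{r10:.0f} back-end IOPS, R50: ~{r50:.0f} back-end IOPS")
```

Under this model the RAID 50 volume was doing roughly 50% more back-end work for almost the same front-end IOPS, which is one reason RAID 10 is often chosen for write-heavy random workloads.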

SERVER TYPE: VM, Windows 2008 Enterprise

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: Dell PE2950, 32GB RAM; 2x XEON 5450, 3,00 GHz

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EQL PS5000E x 1 / 14+2 Disks / R10 / MTU: 9000

####################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec……Av. CPU Util. %

Max Throughput-100%Read……16,3……3638,3……113,7……35

RealLife-60%Rand-65%Read……21,7……2237,8……17,5……43

Max Throughput-50%Read……17,7……2200,6……67,8……80

Random-8k-70%Read……23,6……2098,4……16,3……41

####################################################################

SERVER TYPE: database server

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: Dell PowerEdge M600, 2*X5460, 32GB RAM.

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EqualLogic PS5000E / 14 x 500GB SATA / RAID10

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……___10.29____……._5694__………_177.94___

RealLife-60%Rand-65%Read…..___31.75____…….__1382__………__10.80___

Max Throughput-50%Read…….___10.51____…….__5664__………_177.02___

Random-8k-70%Read…………___34.34____…….__1345__………__10.51___

EXCEPTIONS: CPU Util. 20% – 15% – 10% – 13%;

####################################################################

SERVER TYPE: VM, VMDK DISK

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: DELL R610, 16GB RAM; 2 x Intel E5540, QuadCore

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EqualLogic PS5000XV / 14+2 DISK (15k SAS) / R50

NOTES: 3 NIC, modified ESX PSP RR IOPS parameter, jumbo on, flowcontrol on

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……..__6,48__……….__9178,56__………_286,83__

RealLife-60%Rand-65%Read…….__13,08__……….__3301,94__………__25,8__

Max Throughput-50%Read………__9,06__……….__6160,2__………__192,51__

Random-8k-70%Read…………..__13,59__……….__3215,69__………__25,12__

##################################################################
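Submissions in this thread mix number formats. The PS5000XV result above uses a decimal comma (6,48 ms), most entries use a decimal point, and at least one (the CX4-240 result further down) uses a comma as a thousands separator (12,029.33). If you collect these results into a script or spreadsheet, a small normalizer helps. The heuristic below is an assumption that happens to fit the entries here: if both separators appear, the comma groups thousands; if only a comma appears, it is the decimal mark. The function name `parse_number` is hypothetical.

```python
def parse_number(text: str) -> float:
    """Parse a posted figure that may use a decimal comma or a thousands comma.

    Heuristic (assumption): with both ',' and '.', treat ',' as a thousands
    separator; with only ',', treat it as the decimal mark. Template underscores
    such as '__6,48__' are stripped first.
    """
    s = text.strip().strip("_").strip()
    if "," in s and "." in s:
        s = s.replace(",", "")      # 12,029.33 -> 12029.33
    elif "," in s:
        s = s.replace(",", ".")     # 9178,56 -> 9178.56
    return float(s)


for raw in ["__9178,56__", "12,029.33", "319.48"]:
    print(raw, "->", parse_number(raw))
```

The heuristic would misread a bare thousands value such as "1,074" as 1.074, so it only suits data where grouped numbers always carry a decimal part, as they do here.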

SERVER TYPE: Windows XP VM w/ 1GB RAM on ESXi 4

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: Sun SunFire x4150, 48GB RAM; 2x XEON E5450, 2.992 GHz

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Two EQL PS6000Es / 14+2 SATA Disks / R50

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……15.025……3915.89……122.37

RealLife-60%Rand-65%Read……12.20……3324.92……25.97

Max Throughput-50%Read……13.18……4460.97……139.40

Random-8k-70%Read……13.40……3033.14……23.69

EXCEPTIONS: CPU%= 44 – 66 – 40 – 63

Using iSCSI with the software initiator: 4 NICs, each with its own VMkernel port assigned.

##################################################################################

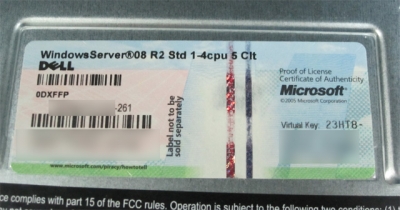

Server Type: VM Windows Server 2008 R2 x64 Std. on VMware ESXi 4.1

CPU Type / Number: vCPU / 1

VM Hardware Version 7

Two vmxnet3 NICs (10 Gbit) used for the iSCSI connection (10 GB LUN connected directly to the VM, no VMFS/RDM)

MS iSCSI Initiator (integrated in 2008 R2)

SAN Type: EQL PS6000XV (14+2 SAS HDDs, 15K, RAID 50)

Switches: Dell PowerConnect 6224

ESX Host is equipped with four 1GBit NICs (only for iSCSI connection)

Jumbo Frames and Flow Control enabled.

##################################################################################

Test……………………Av. Resp. Time ms……Total IOs/sec……Total MB/sec

##################################################################################

Max Throughput-100%Read……..___10.1929_____…….___4967.06_____…..____155.22______

RealLife-60%Rand-65%Read……_____12.6970____…..____3933.39____…..____30.73______

Max Throughput-50%Read………____9.5941____…..____5115.05____…..____159.85______

Random-8k-70%Read…………..____12.9845_____…..____4030.60______…..____31.49______

##################################################################################
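A useful cross-check between the response-time and IOPS columns is Little's law: the average number of I/Os in flight equals throughput multiplied by average latency. Applied to the Windows Server 2008 R2 / PS6000XV result above, every test lands near 50 outstanding I/Os, which suggests IOMeter held a roughly constant queue depth and the differences between tests show up as latency traded against IOPS. The sketch assumes latencies are in milliseconds, as the column header states; it does not know the actual IOMeter outstanding-I/O setting, which this post does not give.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's law: mean I/Os in flight = completion rate x mean response time."""
    return iops * latency_ms / 1000.0


# Test -> (IOPS, average response time in ms) from the result above
result = {
    "Max Throughput-100%Read": (4967.06, 10.1929),
    "RealLife-60%Rand-65%Read": (3933.39, 12.6970),
    "Max Throughput-50%Read": (5115.05, 9.5941),
    "Random-8k-70%Read": (4030.60, 12.9845),
}

for test, (iops, ms) in result.items():
    print(f"{test}: ~{outstanding_ios(iops, ms):.1f} I/Os in flight")
```

A result whose implied concurrency swings wildly from test to test is worth a second look, since it may mean the columns were swapped or the test settings changed between runs.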

SERVER TYPE: Dell NX3100

CPU TYPE / NUMBER: Intel 5620 x2 24GB RAM

HOST TYPE: Server 2008 64bit

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EqualLogic PS4000XV-600 / 14 x 600GB 15K SAS / R50

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read 8.3 7163 223

RealLife-60%Rand-65%Read 11.4 4516 35

Max Throughput-50%Read 8.4 6901 215

Random-8k-70%Read 11.9 4415 34

##################################################################################

SERVER TYPE: W2K8 32bit on ESXi 4.1 Build 320137, 1 vCPU, 2GB RAM

CPU TYPE / NUMBER: Intel X5670 @ 2.93GHz

HOST TYPE: Dell PE R610 w/ Broadcom 5709 Dual Port w/ EQL MPIO PSP Enabled

NETWORK: Dell PC 6248 Stack w/ Jumbo Frames 9216

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EQL PS4000X 16 Disk Raid 50

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec……Av. CPU Util.

##################################################################################

Max Throughput-100%Read 8.12 7410 231 29%

RealLife-60%Rand-65%Read 10.65 3347 26 59%

Max Throughput-50%Read 7.19 7861 245 34%

Random-8k-70%Read 11.37 3387 26 55%

##################################################################################

Also compared with other major iSCSI/FC SAN vendors:

SERVER TYPE: VM ON ESX 3.5 U3

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: HP DL380 G5, 24GB RAM; 4x XEON 5410 (Quad), 2,33 GHz

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EMC CX4-120 / 4+1 / R5 / 14+1 total disks

SAN TYPE / HBAs : 4Gb FC HP StorageWorks FC1142SR (QLogic)

MetaLUNs are configured with 200GB LUNs striped across all 14 disks, for a total datastore size of 600GB.

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……………__6______……….___9320___………___291____

RealLife-60%Rand-65%Read……___24_____……….__1638___………____13____

Max Throughput-50%Read…………….____5____……….___11057___………___345____

Random-8k-70%Read……………..____23____……….___1800___………____14____

####################################################################

SERVER TYPE: VM on ESX 3.5.0 Update 4

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: HP Proliant DL385C G5, 32GB RAM; 2x AMD 2,4 GHz Quad-Core

SAN Type: HP EVA 4400 / Disks: 4Gb FC 172GB 15k / RAID LEVEL: Raid5 / 38+2 Disks / 8Gbit FC HBA

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read…….______5___……….___10690__……..___334____

RealLife-60%Rand-65%Read……______8___……….____5398__……..____42____

Max Throughput-50%Read…….._____49___……….____1452__……..____45____

Random-8k-70%Read………….______9___……….____5390__……..____42____

EXCEPTIONS: NTFS 32k Blocksize

##################################################################################

SERVER TYPE: VM WIN2008 64bit SP2 / ESX 4.0 ON Dell MD3000i via PC 5424

CPU TYPE / NUMBER: VCPU / 2 (JUMBO FRAMES, MPIO RR)

HOST TYPE: Dell R610, 16GB RAM; 2x XEON 5540, 2,5 GHz, QC

iSCSI: VMware iSCSI software initiator, onboard Broadcom 5709 with TOE+iSCSI

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Dell MD3000i x 1 / 6 Disks (15K 146GB) / R10

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read……..____14,55___……….____4133__………____128,48____

RealLife-60%Rand-65%Read……_____22,69_………._____2085__………____16,92____

Max Throughput-50%Read………._____14,13___………._____4289__………____134,04____

Random-8k-70%Read…………….._____21,7__………._____2272__………____17,75___

##################################################################################


SERVER TYPE: Windows Server 2003r2 x32 VM with LSI Logic controller, ESX 4.0

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: HP BL490c G6, 64GB RAM; 2x XEON E5540, 2,53 GHz, QC

STORAGE TYPE / DISK NUMBER / RAID LEVEL: HP EVA6400 / 23 Disks / RAID5

Test name Avg resp time (ms) Avg IO/s Avg MB/s Avg % CPU

Max Throughput-100%Read 5.5 10831 338.47 38

RealLife-60%Rand-65%Read 10.8 4313 33.70 45

Max Throughput-50%Read 31.6 1822 56.95 17

Random-8k-70%Read 9.9 4613 36.04 47

SERVER TYPE: Windows Server 2008 x64 VM with LSI Logic SAS controller, ESX 4.0

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: HP BL490c G6, 64GB RAM; 2x XEON E5540, 2,53 GHz, QC

STORAGE TYPE / DISK NUMBER / RAID LEVEL: HP EVA6400 / 48 Disks / RAID10

Test name Avg resp time (ms) Avg IO/s Avg MB/s Avg % CPU

Max Throughput-100%Read 5.51 10905 340.8 32

RealLife-60%Rand-65%Read 8.20 6366 49.7 39

Max Throughput-50%Read 9.31 5279 165 43

Random-8k-70%Read 7.81 6734 52.6 39

####################################################################

SERVER TYPE: VM ON VI4

CPU TYPE / NUMBER: VCPU / 1

HOST TYPE: Supermicro, 64GB RAM; 4x XEON E5430, 2,66 GHz, QC

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Sun 7410 / 11x 1TB + 18GB SSD (write) + 100GB SSD (read)

##################################################################################

SAN TYPE / HBAs : 1Gb NIC, NFS

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

Max Throughput-100%Read……..__17______……….___3421___………___106____

RealLife-60%Rand-65%Read……___6_____……….___7771___………____60____

Max Throughput-50%Read……….____11____……….___5321___………___166____

Random-8k-70%Read……………..____6____……….___2662___………____60____

##################################################################################

SERVER TYPE: VM Windows 2003, 1GB RAM

CPU TYPE / NUMBER: 1 VCPU

HOST TYPE: IBM x3650 M2, 34GB RAM, 2x X5550, 2,66 GHz QC

STORAGE TYPE / DISK NUMBER / RAID LEVEL: IBM DS3400 (1024MB CACHE/Dual Cntr) 11x SAS 15k 300GB / R6 + EXP3000 (12x SAS 15k 300GB) for the tests

SAN TYPE / HBAs : FC, QLA2432 HBA

##################################################################################

RAID10, 10 HDDs……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read_______5,8_______________9941_______310

RealLife-60%Rand-65%Read_____16,7______________3083_________24

Max Throughput-50%Read________12,6______________4731________147

Random-8k-70%Read___________15,5______________3201________25

##################################################################################


SERVER TYPE: 2008 R2 VM ON ESX 4.0 U1

CPU TYPE / NUMBER: VCPU / 1 / 2GB Ram

HOST TYPE: HP BL460 G6, 32GB RAM; XEON X5520

STORAGE TYPE / DISK NUMBER / RAID LEVEL: EMC CX4-240 / 3x 300GB 15K FC / RAID 5

SAN TYPE / HBAs: 8Gb Fiber Channel

Test Name Avg. Response Time (ms) Avg. I/O per Second Avg. MB per Second CPU Utilization (%)

Max Throughput – 100% Read 5.03 12,029.33 375.92 21.87

Real Life – 60% Rand / 65% Read 42.81 1,074.93 8.39 19.57

Max Throughput – 50% Read 3.63 16,444.30 513.88 29.67

Random 8K – 70% Read 51.44 1,039.38 8.12 14.01

SERVER TYPE: 2003 R2 VM ON ESX 4.0 U1

CPU TYPE / NUMBER: VCPU / 1 / 1GB Ram

HOST TYPE: HP DL360 G6, 24GB RAM; XEON X5540

STORAGE TYPE / DISK NUMBER / RAID LEVEL: LeftHand P4300 x 1 / 7+1 RAID 5 / 10K SAS drives

SAN TYPE / HBAs: iSCSI, software initiator, 2x Intel 82571EB GbE NIC ports, one connection on each, MPIO enabled, Jumbo Frames enabled, 4 iSCSI connections to the volume, 1x HP ProCurve switch

Test Name Avg. Response Time (ms) Avg. I/O per Second Avg. MB per Second CPU Utilization (%)

Max Throughput – 100% Read 13.94 4289.95 134.06 22.17

Real Life – 60% Rand / 65% Read 18.95 1952.18 15.25 54.70

Max Throughput – 50% Read 41.95 1284.81 40.13 27.41

Random 8K – 70% Read 15.56 2132.71 16.66 60.32

####################################################################


SERVER TYPE: VMWare ESX 4u1

GUEST OS / CPU / RAM Win2K3 SP2, 2 VCPU, 2GB

HOST TYPE: DELL R610, 32GB RAM, 2 x Intel E5520, 2.27GHz, QuadCore

STORAGE TYPE / DISK NUMBER / RAID LEVEL: PILLAR DATA AX500 180 drives 525GB SATA, RAID5

SAN TYPE / HBAs : FCOE CNA EMULEX LP21002C on NEXUS 5010

####################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################

Max Throughput-100%Read….5.1609……….11275……… 362.86 CPU=22.84%

RealLife-60%Rand-65%Read…3.2424……… 17037…….. 131.68 CPU=32.6%

Max Throughput-50%Read……4.2503 ………12742 …….. 403.35 CPU=26.45%

Random-8k-70%Read………….3.2759……….16824………128.19 CPU=30.39%

##################################################################
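Raw IOPS favour large arrays, so comparing the 180-drive Pillar above with a 16-bay EqualLogic is easier after dividing by disk count. The sketch below does that for the RealLife test. It is only a crude normalization under stated assumptions: it ignores write-back cache, SSD tiers and RAID overhead, it uses the disk counts exactly as listed in the submissions (hot spares in "14+2" excluded), and the selection of four entries is arbitrary. `iops_per_disk` is a hypothetical helper name.

```python
def iops_per_disk(iops: float, disks: int) -> float:
    """Crude normalization: front-end IOPS divided by the listed disk count."""
    return iops / disks


# (description, RealLife-60%Rand-65%Read IOPS, disk count) as posted in this thread
results = [
    ("EQL PS6000XV, 14+2 x 15K SAS, RAID10", 3614.63, 14),
    ("EQL PS5000E, 14 x SATA, RAID10", 1382, 14),
    ("HP EVA6400, 48 disks, RAID10", 6366, 48),
    ("Pillar AX500, 180 x SATA, RAID5", 17037, 180),
]

# Highest IOPS per disk first
ranked = sorted(results, key=lambda r: iops_per_disk(r[1], r[2]), reverse=True)

for name, iops, disks in ranked:
    print(f"{name}: {iops_per_disk(iops, disks):.0f} IOPS per disk ({iops} total)")
```

On these four entries the PS6000XV comes out well ahead per disk; treat that as a prompt for further testing rather than a verdict.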

SERVER TYPE: ESXi 4.10 / Windows Server 2008 R2 x64, 2 vCPU, 4GB RAM

CPU TYPE / NUMBER: Intel Xeon X5670 @ 2.93GHz

HOST TYPE: HP ProLiant BL460c G7

STORAGE TYPE / DISK NUMBER / RAID LEVEL: NetApp FAS6280 Metrocluster, FlashCache / 80 Disks / RAID-DP

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec……Av. CPU Util.

##################################################################################

Max Throughput-100%Read 4.07 11562 361 63%

RealLife-60%Rand-65%Read 1.67 22901 178 1%

Max Throughput-50%Read 3.93 11684 365 61%

Random-8k-70%Read 1.45 25509 199 1%

##################################################################

SERVER TYPE: HP Proliant DL360 G7

CPU TYPE / NUMBER: Intel Xeon 5660 @2.8 (2 Processors)

HOST TYPE: Server 2008R2, 4vCPU, 12GB RAM

STORAGE TYPE / DISK NUMBER / RAID LEVEL: HP P4500 SAN, 24 x 600GB 15K in Network RAID 10; 4 paths to the virtual iSCSI IP; Round Robin host IOPS policy set to 1; Jumbo Frames enabled; Netflow enabled

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec……Av. CPU Util.

##################################################################################

Max Throughput-100%Read 8.45 7119 222 22%

RealLife-60%Rand-65%Read 15.68 2423 18 55%

Max Throughput-50%Read 9.75 6000 187 25%

Random-8k-70%Read 11.71 2918 22 61%

##################################################################

EMC VNX5500, 200GB FAST Cache (4x 100GB EFD, RAID 1)

Pool of 25x 300GB 15K disks

Cisco UCS blades

##################################################################################

TEST NAME……………………Av. Resp. Time ms……Av. IOs/sec……Av. MB/sec

##################################################################################

Max Throughput-100%Read—— 16068 — 502 —– 1.71

RealLife-60%Rand-65%Read—– 3498 —- 27 —– 10.95

Max Throughput-50%Read——– 12697 —- 198 —- 0.885

Random-8k-70%Read—————- 4145 —– 32.38 — 8.635

##################################################################