Around this time last December, I was searching for a reliable, cost-effective SSD for our vSphere ESX environment, as the SSDs from Dell are prohibitively expensive to implement.

Besides, Dell SSDs may have performance problems, as someone described on VMTN. Those first-generation SSDs are either Samsung OEM (100GB and 200GB) or Pliant-based (149GB); the Pliant drives are much faster than the Samsung ones and, of course, much more expensive (overpriced, that is). Both use eMLC NAND.

Anyway, I finally purchased a Crucial M4 SSD 128GB 2.5″ SATA 3 6Gb/s (around USD 200 with a 3-year warranty); see here for a list of reasons.

My goal was to put this SSD in a Dell PowerEdge R710 2.5″ tray and see how amazing it would be. I know it’s not a supported configuration, but there’s no harm in trying.

The PERC H700/H800 Technical Book specifically states that SATA SSDs are supported only at 3Gb/s, but I found out this is not true; read on.

1. First things first, I upgraded the Crucial firmware from 0009 to 0309, because in early January 2012 users found that the Crucial M4 SSD has a 5,200-hour BSOD problem (still better than the Intel SSD’s notorious 8MB bug). From Crucial’s release note:

Correct a condition where an incorrect response to a SMART counter will cause the m4 drive to become unresponsive after 5184 hours of Power-on time. The drive will recover after a power cycle, however, this failure will repeat once per hour after reaching this point. The condition will allow the end user to successfully update firmware, and poses no risk to user or system data stored on the drive.
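For scale, 5,184 hours of power-on time is exactly 216 days, a little over seven months of 24/7 operation. The short Python sketch below does that arithmetic. The helper names are hypothetical, and it assumes you already have the drive’s power-on hours from its SMART data (attribute 9, Power-On Hours) by some other means; reading SMART through a PERC controller is outside its scope.

```python
# Arithmetic around the 5,184 power-on-hour threshold from Crucial's m4
# 0309 firmware release note. Helper names are hypothetical.
THRESHOLD_HOURS = 5184  # from the release note quoted above

def threshold_in_days(hours: int = THRESHOLD_HOURS) -> float:
    """Convert power-on hours to days of continuous (24/7) operation."""
    return hours / 24

def hours_until_threshold(power_on_hours: int) -> int:
    """Hours left before the bug can trigger; 0 once the threshold is reached."""
    return max(THRESHOLD_HOURS - power_on_hours, 0)

print(threshold_in_days())          # 216.0
print(hours_until_threshold(5000))  # 184
```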

Also note that SandForce-controller-based SSDs have a weakness: as more and more data is stored on the drive, performance gradually decreases. The Crucial M4 is based on the Marvell 88SS9174 controller and doesn’t have this problem. It is more stable, and its speed stays consistent even when the drive is 100% full.

In addition, the Crucial M4 runs Garbage Collection automatically at the drive level when it is idle. It works in the background in much the same way as TRIM, but independently of the running OS. Since TRIM is an OS-level command, it will not be used if the OS does not support it (i.e., VMware ESX).

2. The most difficult part was actually finding 2.5″ trays for the PowerEdge R710, as Dell does not sell them separately. Luckily, I was able to get two of them quite cheaply from a local auction site. I later found out they might be counterfeit parts, but they work 100% fine; only the color is a bit lighter than the originals.

3. The next obvious step was to insert the M4 SSD into the R710 and hope the PERC H700 would recognize the drive immediately. Unfortunately, the first attempt failed miserably: both drive indicator lights stayed OFF, as if there were no drive in the 2.5″ tray.

Checking the OpenManage log, I found that the drive was not certified by Dell (huh?) and had been blocked by the PERC H700 right away.

Status: Non-Critical 2359 Mon Dec 5 18:37:24 2011 Storage Service A non-Dell supplied disk drive has been detected: Physical Disk 1:7 Controller 0, Connector 1

Status: Non-Critical 2049 Mon Dec 5 18:38:00 2011 Storage Service Physical disk removed: Physical Disk 1:7 Controller 0, Connector 1

Status: Non-Critical 2131 Mon Dec 5 19:44:18 2011 Storage Service The current firmware version 12.3.0-0032 is older than the required firmware version 12.10.1-0001 for a controller of model 0x1F17: Controller 0 (PERC H700 Integrated)
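The last message hinges on comparing 12.3.0-0032 with 12.10.1-0001. If you script a check like this yourself, compare the components numerically: a plain string comparison gets it backwards, because the character "3" sorts after "1". Below is a minimal Python sketch, assuming PERC firmware versions always look like dotted integers with an optional "-build" suffix, as in the log above; the function names are hypothetical.

```python
# Compare PERC-style firmware versions numerically, component by component.
# Assumes the "major.minor.patch-build" shape seen in the OpenManage log.
def parse_version(version: str) -> tuple:
    release, _, build = version.partition("-")
    parts = [int(p) for p in release.split(".")]
    if build:
        parts.append(int(build))  # "0032" -> 32
    return tuple(parts)

def is_older(current: str, required: str) -> bool:
    """True if `current` is an older firmware version than `required`."""
    return parse_version(current) < parse_version(required)

current, required = "12.3.0-0032", "12.10.1-0001"
print(is_older(current, required))  # True: 12.3 < 12.10 numerically
print(current < required)           # False: naive string comparison is wrong here
```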

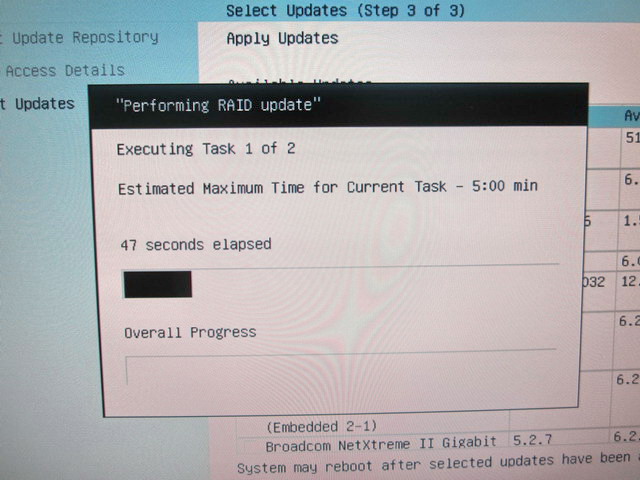

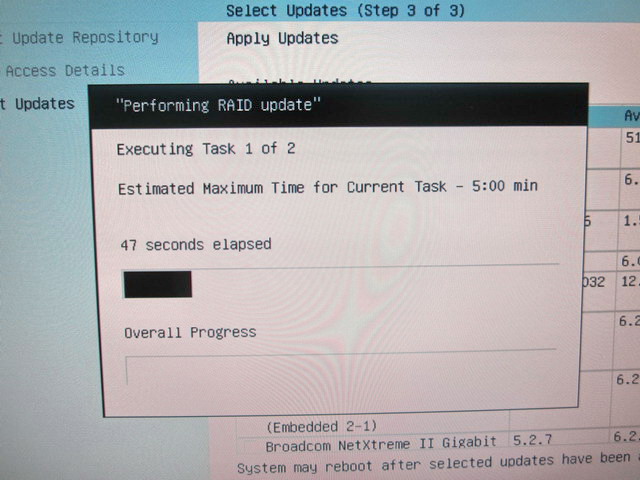

4. I then found out that the older H700 firmware blocks access to non-Dell drives, so I had to update the PERC H700 firmware to the latest version (v12.10.x), again using USC. Before the upgrade, I booted into the H700’s ROM and confirmed that the SSD was indeed not present in the drive pool. Anyway, the whole process took about 15 minutes to complete, not bad.

5. After the server came back up, the Crucial M4 128GB SSD showed a light on its tray indicator and was only partly working correctly: the top indicator kept blinking amber (i.e., orange), OpenManage logged “Not Certified by Dell”, and this caused the R710 front-panel LCD to blink amber as well.

In addition, under Host Hardware Health in vCenter, there was one error message: “Storage Drive 7: Drive Slot sensor for Storage, drive fault was asserted”

From the PERC H700 log file:

Status: OK 2334 Mon Dec 5 19:44:38 2011 Storage Service Controller event log: Inserted: PD 07(e0xff/s7): Controller 0 (PERC H700 Integrated)

Status: Non-Critical 2335 Mon Dec 5 19:44:38 2011 Storage Service Controller event log: PD 07(e0xff/s7) is not a certified drive: Controller 0 (PERC H700 Integrated)
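If you want to pick these events out of an exported log rather than reading them by eye, a regular expression over each line is enough. The Python sketch below is a minimal example that assumes the exact line shape shown in this post ("Status: <level> <event ID> <timestamp> Storage Service <message>"); it is not based on any documented OpenManage export format, and the function names are hypothetical.

```python
import re
from datetime import datetime
from typing import Optional

# Line shape assumed from the OpenManage entries quoted in this post.
LINE_RE = re.compile(
    r"^Status: (?P<status>[\w-]+) (?P<event_id>\d+) "
    r"(?P<ts>\w{3} \w{3} +\d{1,2} \d{2}:\d{2}:\d{2} \d{4}) "
    r"Storage Service (?P<message>.+)$"
)

def parse_line(line: str) -> Optional[dict]:
    """Split one log line into status, event ID, timestamp and message."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return {
        "status": m["status"],
        "event_id": int(m["event_id"]),
        "time": datetime.strptime(m["ts"], "%a %b %d %H:%M:%S %Y"),
        "message": m["message"],
    }

def is_uncertified_drive_event(event: dict) -> bool:
    """Match the two non-Dell-drive messages seen in this post."""
    msg = event["message"]
    return "not a certified drive" in msg or "non-Dell supplied disk" in msg

sample = ("Status: Non-Critical 2335 Mon Dec 5 19:44:38 2011 Storage Service "
          "Controller event log: PD 07(e0xff/s7) is not a certified drive: "
          "Controller 0 (PERC H700 Integrated)")
event = parse_line(sample)
print(event["event_id"], event["time"], is_uncertified_drive_event(event))
```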

Clearing the log in OpenManage turns the front-panel LCD back to blue, but the SSD’s top indicator light still blinks amber. Don’t worry, the indicator is just showing that it’s a non-Dell drive.

This was later confirmed by a post on VMTN as well:

The issue is that these drives do not have the Dell firmware on them to properly communicate with the Perc Controllers. The controllers are not getting the messages they are expecting from these drives and thus throws the error.

You really won’t get around this issue until Dell releases support for these drives and at this time there does not appear to be any move towards doing this.

I was able to clear all the logs under Server Administrator. The individual lights on the drives still blink amber, but the main bezel panel turns blue. The bezel panel goes back to amber after a reboot, but clearing the logs puts it back to blue again. Minor annoyance for great performance.

Update: If you have OpenManage (OM) version 8.5.0 or above, you can now disable the “not a certified drive” warning completely! Strange that Dell finally listened to their customers after years of complaints.

In C:\Program Files\Dell\SysMgt\sm\stsvc.ini, set the parameter to NonDellCertifiedFlag=no
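If you manage several servers, the edit can be scripted. The Python sketch below assumes the parameter appears in stsvc.ini as a plain `NonDellCertifiedFlag=...` line (this post only gives the target value, not the file layout). It rewrites that line and refuses to guess if the key is missing. Back up the file first; whether the OpenManage services need a restart before the change takes effect is not covered here. The function names are hypothetical.

```python
# Set NonDellCertifiedFlag=no in the text of stsvc.ini.
# Assumption: the key sits on its own "key=value" line somewhere in the file.
def set_ini_value(ini_text: str, key: str = "NonDellCertifiedFlag",
                  value: str = "no") -> str:
    lines = ini_text.splitlines()
    found = False
    for i, line in enumerate(lines):
        name, sep, _ = line.partition("=")
        # INI keys are usually case-insensitive, so compare loosely.
        if sep and name.strip().lower() == key.lower():
            lines[i] = f"{key}={value}"
            found = True
    if not found:
        # Don't guess which section the key belongs in; edit by hand instead.
        raise KeyError(f"{key} not found")
    return "\n".join(lines) + "\n"

def update_stsvc_ini(path: str) -> None:
    """Rewrite the INI file in place with the flag set to 'no'."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(set_ini_value(text))
```

Usage would be `update_stsvc_ini(r"C:\Program Files\Dell\SysMgt\sm\stsvc.ini")`, run with administrator rights.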

6. The next most important step is a VMFS rescan. ESX 4.1 found the SSD immediately (yeah!), and I added it under the Storage section for testing.

Then I tested this SSD with IOMeter. Wow, man! This SINGLE little drive blows our PS6000XV (14 x 15K RPM RAID10) away: 7,140 IOPS in the RealLife test (60% random, 65% read), almost TWICE the PS6000XV!!! ABSOLUTELY SHOCKING!!!

What this means is that a single M4 = 28 x 15K RPM disks in RAID10, absolutely crazy numbers!
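The “28 disks” figure is proportional scaling: if 14 spindles deliver roughly half the SSD’s IOPS, matching the SSD takes about twice as many. The Python sketch below assumes the PS6000XV’s result is about 3,500 IOPS (the figure quoted for it later in this post) and that IOPS scale linearly with spindle count, which real arrays only approximate. The function name is hypothetical.

```python
def equivalent_spindles(ssd_iops: float, array_iops: float, array_disks: int) -> float:
    """Disks the array would need to match the SSD, assuming linear IOPS scaling."""
    per_disk = array_iops / array_disks  # 3,500 / 14 = 250 IOPS per 15K disk
    return ssd_iops / per_disk

print(round(equivalent_spindles(7140, 3500, 14), 2))  # 28.56
```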

| Test name | Av. resp. time (ms) | Av. IOs/sec | Av. MB/sec |
|---|---|---|---|
| Max Throughput-100%Read | 1.4239 | 39832.88 | 1244.78 |
| Max Throughput-100%Write | 1.4772 | 37766.44 | 1180.20 |
| RealLife-60%Rand-65%Read | 8.1674 | 7140.76 | 55.79 |

EXCEPTIONS: CPU Util. 93.96%, 94.08%, 30.26%
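The MB/sec column follows from IOPS × block size. The block sizes are not shown in the results; the Python sketch below assumes 32KB for the two Max Throughput tests and 8KB for RealLife (with 1MB = 1024KB), which are the values that reproduce the reported MB/sec to two decimal places.

```python
ROWS = {
    # test name: (avg IOPS, reported MB/s, assumed block size in KB)
    "Max Throughput-100%Read": (39832.88, 1244.78, 32),
    "Max Throughput-100%Write": (37766.44, 1180.20, 32),
    "RealLife-60%Rand-65%Read": (7140.76, 55.79, 8),
}

def mb_per_sec(iops: float, block_kb: int) -> float:
    """Throughput implied by an IOPS figure at a fixed transfer size."""
    return iops * block_kb / 1024

for name, (iops, reported, block_kb) in ROWS.items():
    print(f"{name}: {mb_per_sec(iops, block_kb):.2f} MB/s (reported {reported})")
```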

So why would I spend 1,000 times more when I can get this result with a single SSD for under USD 200? (I was later proved wrong: under sustained I/O, the EqualLogic stays at 3,500 IOPS while the SSD drops to 1/10 of its starting value.)

Oh… one final good thing: the Crucial M4 SATA SSD is recognized as a 6Gbps device by the H700. As mentioned in the very first lines, the PERC H700 tech book says the H700’s SATA SSD interface only supports up to 3Gbps. I don’t know whether it’s the latest PERC H700 firmware or the M4 SSD itself that somehow breaks that limit.

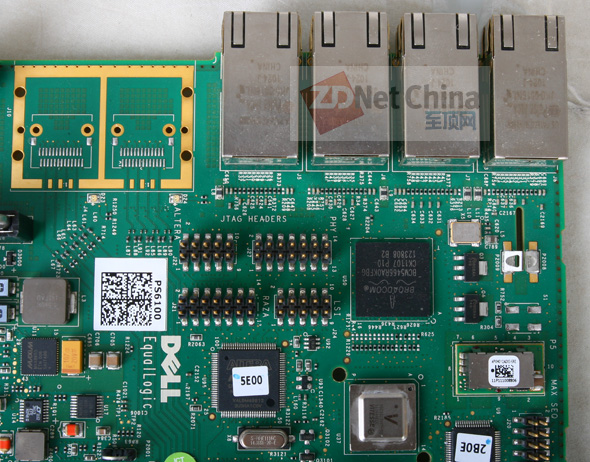

Let’s talk a bit more about the PERC H700 itself. Most people know that Dell’s RAID controller cards have been LSI MegaRAID OEM parts since the PowerEdge 2550 (the fifth generation), and the Dell PERC H700 shares many advanced features with its LSI MegaRAID counterparts, such as CacheCade, FastPath and SSD Guard, but these are ONLY available on the PERC H700 version with 1GB NV RAM cache.

Optimum Controller Settings for CacheCade – SSD Caching

Write Policy: Write Back

IO Policy: Cached IO

Read Policy: No Read Ahead

Stripe Size: 64 KB

Cut-Through IO = FastPath. Cut-through IO (CTIO) is an IO accelerator for SSD arrays that boosts the throughput of devices connected to the PERC controller. It is enabled by disabling the write-back cache (i.e., enabling write-through cache) and disabling Read Ahead.
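The two sets of recommendations pull in opposite directions: the CacheCade settings call for Write Back, while FastPath needs write-through. A small Python sketch that encodes the rules exactly as stated above makes this explicit. The `VDPolicy` class and its string values are hypothetical labels, not MegaRAID Storage Manager or MegaCLI identifiers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VDPolicy:
    """Virtual-disk cache settings (hypothetical labels, not tool syntax)."""
    write_policy: str  # "WriteBack" or "WriteThrough"
    io_policy: str     # "CachedIO" or "DirectIO"
    read_policy: str   # "ReadAhead" or "NoReadAhead"
    stripe_kb: int

# "Optimum Controller Settings for CacheCade" as listed above.
CACHECADE_OPTIMUM = VDPolicy("WriteBack", "CachedIO", "NoReadAhead", 64)

def fastpath_eligible(p: VDPolicy) -> bool:
    """FastPath/CTIO per the text: write-through cache and no read-ahead."""
    return p.write_policy == "WriteThrough" and p.read_policy == "NoReadAhead"

print(fastpath_eligible(CACHECADE_OPTIMUM))  # False: write-back rules out FastPath
print(fastpath_eligible(VDPolicy("WriteThrough", "DirectIO", "NoReadAhead", 64)))  # True
```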

Because the PERC is an LSI MegaRAID OEM card, you can also use LSI MegaRAID Storage Manager to control your PERC H700 or H800. In my case, my H700 supports none of the above features, as it’s only the 512MB cache version. However, “SSD Caching = Enable” shows up in the controller properties in LSI MegaRAID Storage Manager and cannot be turned off, as there is no such option. I am not sure what this is (it’s definitely not CacheCade); if you know, please let me know.

Next, let’s dig deeper into the PERC H700’s bandwidth, as I found the card itself can reach almost 2GB/s, which again seems too good to be true!

The PERC H700 Integrated card, with two x4 internal mini-SAS ports, supports a PCIe 2.0 x8 host interface on the riser.

The PERC H700 uses a PCIe 2.0 x8 host interface (500MB/s per x1 lane) and has TWO x4 SAS 2.0 (6Gbps) ports, so counting 500MB/s per lane, the total bandwidth of EACH x4 port is 500MB/s x 4 = 2,000MB/s (i.e., 2GB/s).

EACH SATA III or SAS 2.0 link runs at 6Gbps, which means EACH drive could in theory transfer up to 750MB/s (6Gbps ÷ 8, before encoding overhead), if such a SAS drive existed. In reality, it would take about SIXTEEN (16) 6Gbps 15K rpm disks (each about 120MB/s) to saturate ONE PERC H700’s 2GB/s theoretical bandwidth.
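The bandwidth arithmetic above can be redone with line coding included. The Python sketch below assumes 8b/10b encoding on both PCIe 2.0 (5 GT/s per lane) and 6Gb/s SAS/SATA, and counts 1MB as 10^6 bytes. Under those assumptions a PCIe 2.0 lane carries 500MB/s, so the x8 host link is about 4GB/s per direction, while a 6Gb/s lane carries about 600MB/s rather than the raw 750MB/s, so an x4 SAS port is about 2.4GB/s. Neither link falls below the 2GB/s figure used in this post, and 120MB/s disks still need about 17 drives to fill it. Function names are hypothetical.

```python
import math

def lane_mb_per_s(gt_per_s: float, data_bits: int = 8, coded_bits: int = 10) -> float:
    """Payload MB/s (1 MB = 10**6 bytes) of one serial lane after line coding."""
    return gt_per_s * 1000 / 8 * data_bits / coded_bits

pcie2_lane = lane_mb_per_s(5.0)  # PCIe 2.0: 5 GT/s, 8b/10b -> 500 MB/s
sas2_lane = lane_mb_per_s(6.0)   # SAS 2.0 / SATA III: 6 Gb/s, 8b/10b -> 600 MB/s
raw_6g = 6.0 * 1000 / 8          # 6 Gb/s ignoring encoding -> 750 MB/s

print(8 * pcie2_lane)  # x8 PCIe 2.0 host link, MB/s
print(4 * sas2_lane)   # one x4 SAS 2.0 port, MB/s

def disks_to_fill(link_mb_s: float, per_disk_mb_s: float) -> int:
    """Whole disks needed to reach a given bandwidth."""
    return math.ceil(link_mb_s / per_disk_mb_s)

print(disks_to_fill(2000, 120))  # 15K disks at ~120 MB/s each
```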

A single Crucial M4 SSD going over 1GB/s in both read and write really shocked me!

This means two consumer-grade Crucial M4 SSDs in RAID0 should easily be enough to saturate the PERC H700’s total 2GB/s bandwidth.

The ESX storage performance chart also shows IOPS consistent with the IOMeter results (i.e., over 35,000 in sequential read/write).

Veeam Monitor shows 1.28GB/s read and 1.23GB/s write.

In fact, it’s not just me: I found that many people were able to reach a maximum of about 1,600MB/s (1.6GB/s) with two or more SSDs on a PERC H700 (the theoretical figure is 2GB/s).

Of course, the newer PCIe 3.0 standard offers roughly 1GB/s per x1 lane, so an x4 link gives you about 4GB/s, twice the bandwidth (a 100% increase). Hopefully someone will benchmark the PowerEdge R720 with its PERC H710 soon.
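PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding, so “1GB/s per lane” is really about 985MB/s. The short Python sketch below, assuming those link rates and 1MB = 10^6 bytes, shows that an x4 PCIe 3.0 link carries about 3.9GB/s versus 2GB/s for x4 PCIe 2.0: close to double, i.e. roughly a 100% increase. It says nothing about which PCIe generation any particular PERC card actually uses.

```python
def lane_mb_per_s(gt_per_s: float, data_bits: int, coded_bits: int) -> float:
    """Payload MB/s (1 MB = 10**6 bytes) of one PCIe lane after line coding."""
    return gt_per_s * 1000 / 8 * data_bits / coded_bits

pcie2 = lane_mb_per_s(5.0, 8, 10)     # PCIe 2.0: 500 MB/s per lane
pcie3 = lane_mb_per_s(8.0, 128, 130)  # PCIe 3.0: ~984.6 MB/s per lane
x4_gain = (4 * pcie3) / (4 * pcie2)   # ~1.97x, i.e. roughly a 100% increase

print(round(pcie3, 1), round(4 * pcie3), round(x4_gain, 2))
```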

Some will say using a single SSD is not safe. OK, then make it RAID1, or even RAID10 or RAID50 with 8 SSDs. With the newer PowerEdge R720, you can put up to 14 SSDs in a RAID10/RAID50/RAID60 plus 2 hot spares in 2U; more than enough, right?

The most important thing is that the COST IS MUCH, MUCH LOWER with consumer-grade SSDs, and it’s not hard to imagine 14 SSDs in RAID10 producing some incredible IOPS; I guess something in the 50,000 to 100,000 range should be achievable without much problem. So why fork out for those sky-high $$$ Fusion-io PCIe cards?
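The 50,000 to 100,000 guess can be sanity-checked with the common RAID write-penalty rule of thumb (each host write costs 2 back-end I/Os on RAID10, 4 on RAID5/50 and 6 on RAID6/60). The Python sketch below assumes each SSD sustains the single-drive RealLife figure of about 7,140 IOPS at a 65% read mix, that IOPS scale linearly across 14 drives, and that the controller is not the bottleneck. Given the sustained-load drop noted earlier and the H700’s own limits, treat the result as an optimistic upper bound, not a prediction. The function name is hypothetical.

```python
# Rule-of-thumb back-end I/Os per host write for each RAID level.
WRITE_PENALTY = {"RAID10": 2, "RAID50": 4, "RAID60": 6}

def host_iops(per_drive: float, drives: int, read_frac: float, level: str) -> float:
    """Host-visible IOPS = raw back-end IOPS / (reads + penalty * writes)."""
    backend = per_drive * drives
    write_frac = 1.0 - read_frac
    return backend / (read_frac + WRITE_PENALTY[level] * write_frac)

for level in WRITE_PENALTY:
    print(level, round(host_iops(7140, 14, 0.65, level)))
```

Under these assumptions RAID10 lands around 74,000 IOPS, inside the range guessed above, while RAID50 and RAID60 fall to roughly 49,000 and 36,000 because of their larger write penalty.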

Finally, I also ran a few desktop benchmarks from within the VM.

Atto:

HD Tune:

In conclusion, for a low-cost consumer SSD, 7,000+ IOPS in the RealLife-60%Rand-65%Read test with an 8K transfer request size is simply amazing!!!