Dell PowerEdge 12G Server: R720 Sneak Preview

It seems to me that Dell is already using eMLC (enterprise MLC) SSDs in its latest PowerEdge 11G series servers.

100GB Solid State Drive SATA Value MLC 3G 2.5in HotPlug Drive,3.5in HYB CARR-Limited Warranty Only [USD $1,007]

200GB Solid State Drive SATA Value MLC 3G 2.5in HotPlug Drive,3.5in HYB CARR-Limited Warranty Only [USD $1,807]

I think the PowerEdge R720 will probably be released at the end of March. I don’t see the point of adding more cores, since CPU is always the last resource to run out. RAM is in fact the most important resource, so more cores or a higher GHz means almost nothing to most ESX admins. Hmm... also, if VMware allowed ESX 5.0 Enterprise Plus to use more than 256GB per processor, that would be a real change.

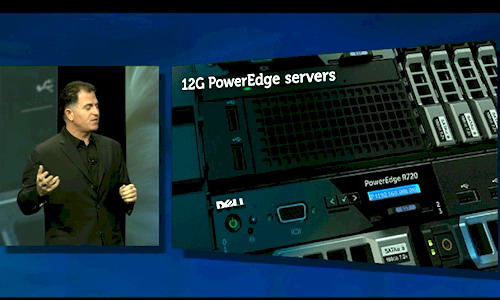

Michael Dell dreams about future PowerEdge R720 servers at OpenWorld

Dell, the man, said that Dell, the company, would launch its 12th generation of PowerEdge servers during the first quarter, as soon as Intel gets its “Sandy Bridge-EP” Xeon E5 processors out the door. Dell wasn’t giving away a lot when he said that future Intel chips would have lots of bandwidth. As readers of El Reg know from way back in May, when we divulged the feeds and speeds of the Xeon E5 processors and their related “Patsburg” C600 chipset, that bandwidth is due to the integrated PCI-Express 3.0 peripheral controllers, LAN-on-motherboard adapters running at 10 Gigabit Ethernet speeds, and up to 24 memory slots in a two-socket configuration supporting up to 384GB using 16GB DDR3 memory sticks running at up to 1.6GHz.
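As a quick sanity check on the memory figure quoted above, here is a minimal sketch of the arithmetic. The function and constant names are made up for illustration, and the even split of slots across the two sockets is an assumption, not something the article states.

```python
# Figures quoted in the article for a two-socket Xeon E5 system.
MEMORY_SLOTS = 24   # DIMM slots in a two-socket configuration
DIMM_SIZE_GB = 16   # capacity of each DDR3 memory stick
SOCKETS = 2


def max_memory_gb(slots: int, dimm_gb: int) -> int:
    """Total memory when every slot holds a DIMM of the given size."""
    return slots * dimm_gb


total_gb = max_memory_gb(MEMORY_SLOTS, DIMM_SIZE_GB)
# Assumption: slots are divided evenly between the sockets.
slots_per_socket = MEMORY_SLOTS // SOCKETS

print(f"{MEMORY_SLOTS} slots x {DIMM_SIZE_GB}GB = {total_gb}GB")
print(f"Slots per socket (assuming an even split): {slots_per_socket}")
```

So the 384GB ceiling is simply every slot filled with a 16GB stick. Larger DIMMs would raise it proportionally.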

But according to Dell, that’s not the end of it. He said that Dell would be integrating “tier 0 storage right into the server,” which is server speak for front-ending external storage arrays with flash storage that is located in the server and making them work together seamlessly. “You can’t get any closer to the CPU,” Dell said.

Former storage partner and rival EMC would no doubt agree, since it was showing off the beta of its own “Project Lightning” server flash cache yesterday at OpenWorld. The idea, which has no doubt occurred to Dell, too, is to put flash cache inside of servers but put it under control of external disk arrays. This way, the disk arrays, which are smart about data access, can push frequently used data into the server flash cache and not require the operating system or databases to be tweaked to support the cache. It makes cache look like disk, but it is on the other side of the wire and inside the server.

Dell said that the new PowerEdge 12G systems, presumably with EqualLogic external storage, would be able to process Oracle database queries 60 times faster than earlier PowerEdge 11G models.

The other secret sauce that Dell is going to bring to bear to boost Oracle database processing, hinted Dell, was the system clustering technologies it got by buying RNA Networks back in June.

RNA Networks was founded in 2006 by Ranjit Pandit and Jason Gross, who led the database clustering project at SilverStorm Technologies (which was eaten by QLogic) and who also worked on the InfiniBand interconnect and the Pentium 4 chip while at Intel. The company gathered up $14m in venture funding and came out of stealth in February 2009 with a shared global memory networking product called RNAMessenger that links multiple server nodes together deeper down in the iron than Oracle RAC clusters do, but not as deep as the NUMA and SMP clustering done by server chipsets.

Dell said that a rack of these new PowerEdge systems – the picture above shows a PowerEdge R720, which would be a two-socket rack server using the eight-core Xeon E5 processors – would have 1,024 cores (that would be 64 servers in a 42U rack), 40TB of main memory (that’s 640GB per server), over 40TB of flash, and would do queries 60 times faster than a rack of PowerEdge 11G servers available today. Presumably these machines also have EqualLogic external storage taking control of the integrated tier 0 flash in the PowerEdge 12G servers.
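The rack-level numbers in the paragraph above can be reproduced with a few lines of arithmetic. This is only a sketch: the names are illustrative, and it assumes binary terabytes (1TB = 1,024GB), which is the convention that makes 40TB across 64 servers come out to the quoted 640GB each.

```python
# Figures quoted in the article for one rack of PowerEdge 12G servers.
SERVERS_PER_RACK = 64
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 8      # eight-core Xeon E5
RACK_MEMORY_TB = 40
GB_PER_TB = 1024          # assumption: binary terabytes


def rack_cores(servers: int, sockets: int, cores: int) -> int:
    """Total CPU cores in the rack."""
    return servers * sockets * cores


def memory_per_server_gb(rack_tb: int, servers: int) -> float:
    """Main memory per server if the rack total is spread evenly."""
    return rack_tb * GB_PER_TB / servers


cores = rack_cores(SERVERS_PER_RACK, SOCKETS_PER_SERVER, CORES_PER_SOCKET)
per_server = memory_per_server_gb(RACK_MEMORY_TB, SERVERS_PER_RACK)

print(f"Cores per rack: {cores}")
print(f"Memory per server: {per_server:.0f}GB")
```

Both quoted figures check out: 64 two-socket, eight-core servers give 1,024 cores, and 40TB spread over 64 servers is 640GB each.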

Update: March 6, 2012

I got an update from The Register on the upcoming 12G servers. One of the most interesting features is that the R720 now supports SSDs that attach directly to PCI-Express slots.

Every server maker is flash-happy these days, and solid-state memory is a big component of any modern server, including the PowerEdge 12G boxes. The new servers are expected to come with Express Flash – the memory modules that plug directly into PCI-Express slots on the server without going through a controller. Depending on the server model, the 12G machines will offer two or four of these Express Flash ports, and a PCI slot will apparently be able to handle up to four of these units, according to Payne. On early tests, PowerEdge 12G machines with Express Flash were able to crank through 10.5 times more SQL database transactions per second than earlier 11G machines without flash.

Update: March 21, 2012

It seems the SSDs that attach directly to PCI-Express will be accessible from outside the chassis and hot-swappable.

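SSD vendor Micron recently launched its first 2.5-inch solid state disk (SSD) with a PCIe interface. What sets this new product apart from the PCIe SSDs already on the market is that it is not an add-in card but a hot-swappable 2.5-inch SSD.

In recent years, servers have also begun to ship with SSDs, but the interface is still mainly SATA or SAS. Alternatively, servers provide PCIe expansion slots so that enterprises can separately purchase SSDs in PCIe add-in card form to extend the server's storage performance and capacity. The PCIe interface offers higher transfer rates, but the drawback is that PCIe expansion slots are mostly located inside the server chassis and do not support hot-swapping. An enterprise that needs to expand or replace a card must shut down the server and open the chassis cover to do so.

Dell's newest 12th-generation PowerEdge servers add 2.5-inch drive bays with a PCIe interface, and the PCIe 2.5-inch SSD that Micron has now launched can be installed at the front of these servers with hot-swap support. Besides retaining the advantage of high transfer speeds, it also improves manageability for enterprises, since IT staff can expand and replace drives more easily.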

![VENDORS[1] VENDORS[1]](http://www.modelcar.hk/wp-content/uploads/2011/12/VENDORS1.jpg)