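I've been collecting model cars for a little over four years now. I started out liking only modern supercars, then gradually fell for the rich culture of classic European cars from the 1960s and 70s. What surprised me most, though, is that not long ago I actually "fell" into the American muscle car pit as well.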

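When it comes to 1960s European car culture, the British motor industry certainly deserves much of the credit. It turned out one "talent" after another in those years; among the better known are the Rolls-Royce Phantom V, Aston Martin DB5, Jaguar XJ13 and E-Type, Jensen Interceptor, and Mini.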

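In fact, by the mid-1960s the United States across the ocean had already kicked off its muscle car craze with the likes of the Pontiac GTO and Ford Mustang, and in the same period Britain itself produced the internationally famous band The Beatles.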

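But these sweeping changes in cultural trends had not yet fundamentally shaken the foundations of Britain's top luxury saloons. The three giants, Rolls-Royce, Bentley and Daimler, seemed to pay no attention to these new things and trends. They carried on as they pleased, continuing the British Empire's "Lord Car" design style, and even managed to hold out for another 20 years. Impressive!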

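As for Bentley, I think it only truly saw the light with the Continental GT launched in 2003: a bold design overhaul that swept away the twilight gloom of British luxury cars and brought unprecedented success and enormous wealth!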

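Of course, I am talking about flexibility in business thinking here. The money has certainly increased quite a bit, but the brand has gone further and further downmarket. To accommodate mass-market demands, things and designs that go against the brand's history keep appearing. This has happened not only at Bentley but also at Ferrari, Lamborghini, Jaguar and others.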

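That out-of-the-ordinary, head-in-the-clouds feeling of the early days is gradually slipping away from us, leaving only a dull, soulless shell. CELSIOR from Taiwan put this especially incisively; his article "從Lamborghini談談品牌精髓" ("On Brand Essence, Starting from Lamborghini") makes exactly this point.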

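Luxury cars are everywhere in China now, yet something feels missing. It is like the many wealthy Chinese who go to London and insist that the Knightsbridge mansion they buy must come with all its furniture and furnishings, down to the family's ancestral oil portraits and the old lady's Scottish Fold cat. What does that tell you? It is plain to see: the word "culture" speaks for itself, because what they want is precisely that century-old patina and the invisible sense of history.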

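Last month Minichamps released a black 1954 Bentley R Type Continental and a light blue 2012 Continental GT, but the price tags were over HK$2,000. Are they kidding? To put it bluntly, these are just toys, yet the model maker takes itself seriously, thinking that the same materials with a different car brand attached can sell for twice the price.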

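Last week I saw the Bentley R Type in a model shop, and the workmanship is quite crude; the interior is a big step down from Minichamps' earlier Bentley releases. The Continental GT is even worse: they only added a side window and the price doubled. I'm not falling for that now! I used to buy every Bentley that came out, but now that I understand this dishonest business practice I have grown tired of it. I detest this brazen philosophy of cashing in on a famous name.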

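My friend J was my first mentor in model cars. It was he who brought me into the world of 1:18, and he who first took me to a prestige car expo in Hong Kong and introduced me to the owner of MCE, after which I happily and willingly "fell" into the pit. J was originally a former colleague of my wife's; she probably regrets introducing him to me now, haha...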

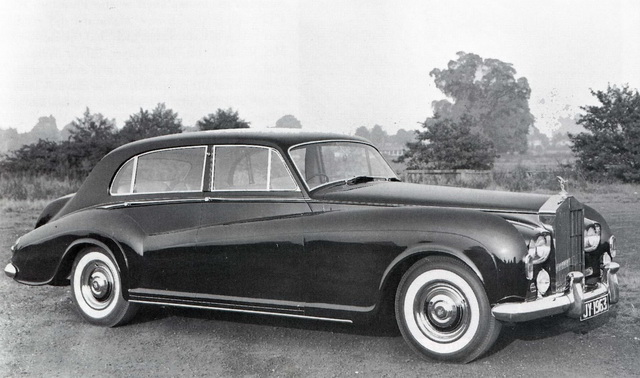

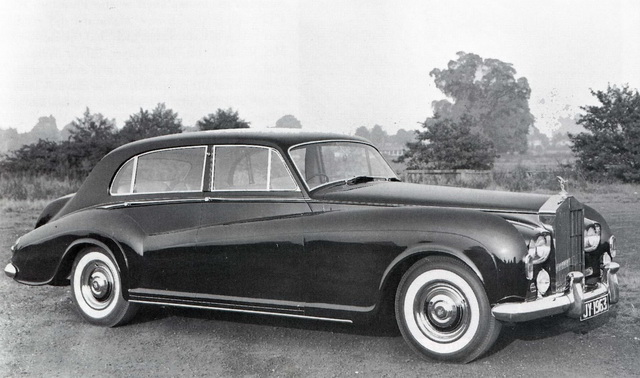

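This Bentley S3 Mulliner Park Ward from the Dutch model maker Neo came from my friend J's collection. It turned out he had bought a second one without realizing it at the time. I have come across this twice recently among different local collectors; it is textbook car-nut behavior.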

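J later put it up for sale on a local auction site, where it sat for a long time. Apparently few people knew how to appreciate it, and I had nearly forgotten about it too. Then two weeks ago Neo released a 1:18 version of the Bentley S3 Mulliner Park Ward, and I suddenly remembered that I still didn't seem to have this exquisite Bentley (in my own view the workmanship is even better than Truescale Miniatures'). I checked: Neo's website showed it as Out of Stock, and prices on international auction sites had been bid up considerably. The convertible version doesn't look as good, either; the hardtop is the pretty one. I actually couldn't stand those distinctive "angry eyes" at first, but classics grow on you the more you look at them, and I gradually fell in love with this Bentley S3 specially designed by Mulliner Park Ward.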

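I phoned J right away and quickly closed the deal; the price, practically half sale and half gift, kept me happy for quite a while. When we met, J agreed that model makers now focus only on the high end, and that model shop owners are complaining bitterly. Without the mass market, and especially the purchasing power of the middle tier, this path seems doomed.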

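Looking into the history afterwards, I learned that for a 1960s Bentley or Rolls-Royce, the body + interior and the engine + chassis were purchased separately.

The engine + chassis were built by the factory, Bentley or Rolls-Royce.

The body + interior were designed and fitted by the two major coachbuilders, Mulliner Park Ward and James Young.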

1/43 NEO Bentley SIII Mulliner Park Ward