Dell released the updated version of its flagship iSCSI EqualLogic PS6100/PS4100 series today. Why do I call it an updated version instead of a completely new generation? It comes down to EqualLogic’s naming convention: the previous products were the PS50, PS100, PS3500, PS5000 and PS6000, so a new generation would have been the PS7000 series. After going through various documents, release notes and blog articles, I confirmed that my guess was right. Best of all, you can mix and match any generation of EqualLogic box in your SAN. I simply love this!

![dellstorage082211[1] dellstorage082211[1]](http://www.modelcar.hk/wp-content/uploads/2011/08/dellstorage0822111.png)

The following are some of the major changes:

1. 4GB cache vs 2GB cache. This probably won’t do much in terms of IOPS performance gain. I know other vendors such as EMC and Hitachi ship products with huge caches of up to 32GB/64GB, but real data shows that the amount of cache isn’t the deciding factor for IOPS.

2. The look of the new PS6100/PS4100: the front and back look almost identical to Dell’s SMB storage PowerVault series, especially the back. It seems to me that, after acquiring EqualLogic back in 2007, Dell has finally decided to use the same OEM hardware to save cost. In particular, I don’t like the cheap look of the controller module on the back, which is too much like the PowerVault MD1200/MD1220/MD3200, and the design of the new PS6100/PS4100 drive tray looks really ugly to me. Well, I am a person with a design background, so I have particular requirements for the look! “Look is Everything!”, as Agassi once said.

3. Dell claims 60% more IOPS with the PS6100. Well, that’s kind of misleading, as there are 24 drives in the PS6100 vs 16 drives in the PS6000. Simple math shows that the extra 8 drives alone already amount to 50% more spindles, not counting the spare drives. It’s also interesting that if you use 24 x 2.5″ 10K drives in a PS6100X, the IOPS performance is probably going to be similar to 16 x 3.5″ 15K drives in a PS6000XV. Of course, if there is a major upgrade in the PS6100 controller hardware, then the story is different. It was the same with the PS5000XV and PS6000XV: the controller module hardware is vastly different, so the performance is different too, even with the same number of spindles and the same disk RPM.

4. All PS6100 models have shrunk from 3U to 2U with 24 x 2.5″ 15K/10K drives, which saves some rack space and power (though probably not power, as there are more drives per 2U). The exception is the PS6100XV 3.5″ model, which has grown from 3U in the PS6000XV to 4U; that extra 1U is for the additional 8 drives.

5. IMO, the best product is the PS6100XS, which really combines Tier 0 (7 x 400GB SSD) with Tier 1 (17 x 15K drives). This is the one I will purchase in our next upgrade cycle.

6. There is a dedicated management port on all PS6100XV models, which is a plus for some deployment scenarios.

7. On the VMware side, Dell said EqualLogic’s firmware 5.1 has “thin provisioning awareness” for VMware vSphere. As a result, the integration can save on recovery time and manage data loss risks. Dell has focused on VMware first given that 90 percent of its storage customers use virtualization and 80 percent of those customers are on VMware.

Over the third and fourth quarters, Dell and VMware will launch:

• EqualLogic Host Integration Tools for VMware 3.1. (Great!)

• Compellent storage replication adapters for VMware’s vCenter Site Recovery Manager 5.

• And Dell PowerVault integration with vSphere 5. (Huh? PV is becoming the low-cost VMware SAN solution for 2-4 host deployments)
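The spindle arithmetic from point 3 above can be sketched in a few lines of Python. The drive counts (16 and 24) come from the post; the per-drive IOPS figures are rough rule-of-thumb assumptions of mine, not Dell numbers, and the function names are purely illustrative. The estimate also ignores RAID overhead, spares, cache and controller effects.

```python
# Rough spindle math for PS6000 (16 drives) vs PS6100 (24 drives).
# ASSUMPTION: per-drive random IOPS values below are generic rules of thumb,
# not figures published by Dell.
ASSUMED_IOPS_PER_DRIVE = {"10K": 130, "15K": 180}


def spindle_increase_percent(old_drives: int, new_drives: int) -> float:
    """Percentage increase in spindle count from old_drives to new_drives."""
    return (new_drives - old_drives) / old_drives * 100


def raw_spindle_iops(drive_count: int, rpm_class: str) -> int:
    """Naive aggregate IOPS: drive count times assumed per-drive IOPS."""
    return drive_count * ASSUMED_IOPS_PER_DRIVE[rpm_class]


if __name__ == "__main__":
    print("Extra spindles: %.0f%%" % spindle_increase_percent(16, 24))
    print("24 x 10K (PS6100X style):", raw_spindle_iops(24, "10K"))
    print("16 x 15K (PS6000XV style):", raw_spindle_iops(16, "15K"))
```

Under these assumed per-drive numbers the two configurations land in the same ballpark, which is the point of the comparison: most of a "60% more IOPS" claim could come from the extra spindles alone.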

Finally, I wonder what “Vertical port failover” means in the PS6100 product PDF?

In fact, as usual, I am more interested in the hardware architecture of the new PS6100 series. One thing is for sure: the PS6100 storage processor has 4 cores vs 2 cores in the PS4100.

Can someone post a photo of the controller module please? What chipset does it use now?

Luckily, Jonathan of virtualizationbuster solved part of the mystery, thank you very much!

-SAS 6Gb Backplane – Finally. That’s all I can say. Will we see a big difference? With 24 drives plus SSDs, SAS 6Gb is definitely needed. Independent review sites covering other storage hardware have shown that we are getting pretty close to maxing SAS 6Gb out, especially with 24 SSDs. I will be talking to the engineering team at EQL about the numbers on this in the future; I am curious.

-Cache to Flash Controllers – Bye-bye battery-backed cache, hello flash-memory-based controllers. All I have to say is it’s about time! (This goes for ALL storage vendors. Hello? It’s 2011!) Note: in my review of the Dell R510, the PERC H700 was refreshed at the beginning of the year to support 1GB NV cache, which is similar to what EQL is using, and it rocks!

-2U Form Factor – Well, you can’t beat consolidation, right? The more space we save in a rack the better, and more drives in each array means more performance per U, per rack, per aisle. I have been a HUGE proponent of the 2.5in drive form factor for many reasons that are outside the scope of this post. I have been running 2.5in server drives from Dell for about 4 years now and they have been rock solid.

-24 Drives vs 16 Drives – Well, you can’t beat more spindles. Not much to say here, but if you are a shop that thinks it NEEDS 15K drives, you really need to take a look at the 2.5in 10K drives. Their performance is stellar. Yes, they are slightly slower than their 15K 3.5in counterparts, but performance is pretty close, especially with 24 of them.
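To put Jonathan’s remark about maxing out SAS 6Gb into numbers, here is a minimal back-of-the-envelope sketch. The 6 Gb/s line rate and 8b/10b encoding are properties of 6Gb SAS; everything else is an assumption for illustration only: the 4-lane wide port, the 250 MB/s per-SSD throughput and the function names are hypothetical, and I have no information on how the PS6100 backplane is actually wired.

```python
# Back-of-the-envelope SAS 6Gb bandwidth math, integer arithmetic only.
SAS_6G_LINE_RATE_BPS = 6_000_000_000  # 6 Gb/s per lane


def lane_payload_mb_s(line_rate_bps: int = SAS_6G_LINE_RATE_BPS) -> int:
    """Usable MB/s per lane after 8b/10b encoding (8 payload bits per 10 line bits)."""
    payload_bits_per_s = line_rate_bps * 8 // 10
    return payload_bits_per_s // 8 // 1_000_000


def port_payload_mb_s(lanes: int) -> int:
    """Usable MB/s for a wide port made of `lanes` lanes."""
    return lanes * lane_payload_mb_s()


def drives_to_saturate(port_mb_s: int, drive_mb_s: int) -> int:
    """Smallest number of drives whose combined throughput reaches the port limit."""
    return -(-port_mb_s // drive_mb_s)  # ceiling division


if __name__ == "__main__":
    ASSUMED_LANES = 4         # ASSUMPTION: a x4 wide port, not a confirmed PS6100 detail
    ASSUMED_SSD_MB_S = 250    # ASSUMPTION: sustained throughput per SSD
    port = port_payload_mb_s(ASSUMED_LANES)
    print("Per-lane payload (MB/s):", lane_payload_mb_s())
    print("x4 port payload (MB/s):", port)
    print("SSDs needed to saturate:", drives_to_saturate(port, ASSUMED_SSD_MB_S))
```

With these assumed values, far fewer than 24 SSDs would be enough to fill a single x4 port on sequential transfers, which is consistent with the concern above. Random small-block workloads would behave differently.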

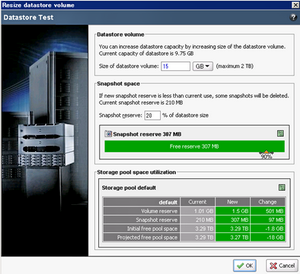

![51a[1] 51a[1]](http://www.modelcar.hk/wp-content/uploads/2011/09/51a1.jpg) Does it make sense to use “not so reliable” MLC SSD for enterprise storage? Well, it really depends on your situation. Hitachi is the first vendor to launch eMLC SSD, and I am sure others will follow this trend. MLC costs a lot less than SLC (at least 50% less) and SSD is known for its huge read IOPS performance, so it may be a good alternative for your database and Exchange type of applications.

The first release of HIT/VE (version 3.0.1) was back in April 2011. It did make storage administrators’ lives a lot easier, particularly if you are managing hundreds of volumes. Well, to be honest, I don’t mind creating volumes, assigning them to ESX hosts and setting the ACLs manually; it only takes a few minutes more. Knowing that every step is carried out correctly matters more to me, because I can control everything. Obviously, I don’t have a huge SAN farm to manage, so HIT/VE is not a life-saving tool for me.

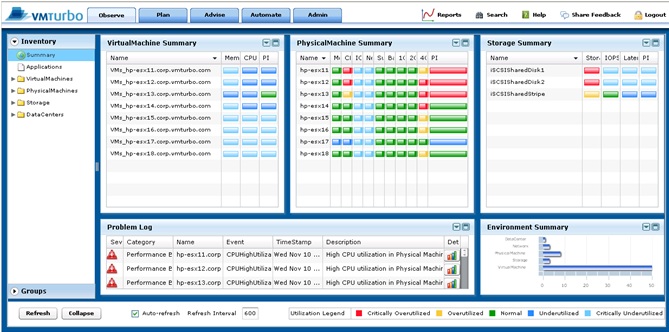

If you have already got Veeam’s Monitor (free version), then this is one you shouldn’t miss. It’s a complement to the Veeam monitoring tool: you can use VMTurbo Community Version (Monitor + Reporter) to see details that are otherwise only available in the paid version of Veeam. Furthermore, it clearly displays a lot of very useful information, such as the IOPS breakdown of individual VMs.


![nutanix_appliannce_architecture[1] nutanix_appliannce_architecture[1]](http://www.modelcar.hk/wp-content/uploads/2011/08/nutanix_appliannce_architecture1.jpg)