Scale-Out Disk Arrays: Expansion Flexibility Unleashed (Repost)

Reposted from iT home Taiwan. It seems EqualLogic is no longer the only vendor that can offer a scale-out iSCSI SAN with auto-tiering.

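Scale-out is flourishing not only in NAS products, which provide file storage services, but also in disk arrays, which provide block storage services. In this article we survey the current state of scale-out SAN storage systems that also offer scale-up expansion, and through a hands-on test of an Infortrend (普安) ESVA disk array we take a closer look at what characterizes this type of product.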

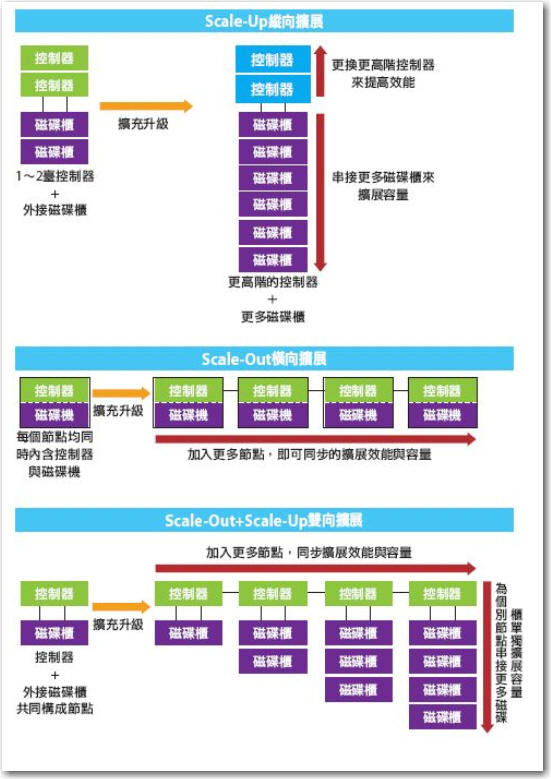

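Combining Scale-Out with Scale-Up: Disk Array Expansion Concepts

Scale-out expansion is today's prevailing approach, but the traditional scale-up architecture still has the advantage of making capacity expansion more convenient. Many scale-out disk arrays therefore retain scale-up expansion as well, so they can meet different users' expansion needs more flexibly.

The scale-out concept, in which multiple nodes form a cluster that jointly provides a service and system capability is expanded by adding nodes when needed, has a long history and is widely applied in many fields, from high-performance computing servers, storage systems, and network equipment to security appliances.

In recent years, to respond more flexibly to the data processing and storage demands brought on by continuously exploding data volumes, scale-out has become the prevailing architecture in storage: simply adding more nodes expands system performance and capacity together, with no need to replace existing nodes.

We covered NAS products built on a scale-out architecture in the NAS buyer's guide in issue 565 and in the cover story of issue 570. This time we turn to the other major category of storage, SAN storage, and look at SAN products that also adopt a scale-out architecture but are designed to provide block access services.

Two Types of Scale-Out Block Storage

For NAS systems, which provide file access services, scale-out capability is achieved mainly by installing a cluster file system on top of each NAS operating system. Besides commercial products, many open-source cluster file systems are available, allowing users to build their own scale-out NAS.

In the SAN storage field, however, no such convenient open-source route exists. Today's scale-out SAN storage systems are essentially built from a proprietary distributed operating system, developed by each vendor specifically to provide block access and to support parallel processing across multiple nodes, paired with proprietary hardware. They fall broadly into two typical architectures: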

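The first uses a unified-addressing architecture for distributed cache memory, which works much like NUMA (Non-Uniform Memory Access) in multiprocessor parallel computing. The cache memory spread across the nodes is given a unified address space, so that through mapping each node's memory is combined into one large, contiguous global memory, and each node's controller can access the other nodes' cache memory and back-end disk space over a dedicated internal switching network.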
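The unified-addressing idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any vendor implements it: the node names, the 64 GiB cache sizes, and the `build_global_map` / `resolve` helpers are all hypothetical. The sketch lays each node's cache end to end in one global address space and translates a global address back to the owning node and a local offset.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class CacheNode:
    name: str          # hypothetical node label
    cache_bytes: int   # hypothetical per-node cache size


def build_global_map(nodes):
    """Lay the nodes' caches end to end in one contiguous global address space."""
    ranges, base = [], 0
    for node in nodes:
        ranges.append((base, base + node.cache_bytes, node.name))
        base += node.cache_bytes
    return ranges


def resolve(ranges, global_addr):
    """Translate a global cache address into (owning node, local offset)."""
    for start, end, name in ranges:
        if start <= global_addr < end:
            return name, global_addr - start
    raise ValueError("address lies outside the global cache")


# Two hypothetical nodes with 64 GiB of cache each form a 128 GiB global cache.
nodes = [CacheNode("node-0", 64 * GIB), CacheNode("node-1", 64 * GIB)]
ranges = build_global_map(nodes)
owner, offset = resolve(ranges, 70 * GIB)
print(owner, offset // GIB)  # node-1 6
```

In a real array the lookup and the remote access happen in hardware over the internal interconnect; the sketch only shows the address translation.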

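Because it requires purpose-designed and very costly controller-to-memory interconnect hardware, NUMA-style scale-out SAN storage is mostly found in high-end products such as EMC's Symmetrix VMAX, the HDS VSP, and the HP 3PAR T-Class.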

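The other scale-out architecture is MPP (Massively Parallel Processing). Each node has its own independent CPU and local cache memory, and the nodes are interconnected through an external network. At the bottom layer, storage virtualization spreads the blocks of a logical volume across the disk space of every node, so that when a front-end host accesses the volume it can draw on the I/O performance of all nodes.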
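To make the block-spreading idea concrete, here is a minimal sketch that assumes simple round-robin striping in fixed-size chunks. Actual products use their own placement algorithms; the chunk size of 256 blocks and the function names are hypothetical.

```python
from collections import Counter


def locate_block(lba, node_count, blocks_per_chunk=256):
    """Map a logical block address to (node index, chunk index held by that node)."""
    chunk = lba // blocks_per_chunk
    return chunk % node_count, chunk // node_count


def blocks_per_node(first_lba, block_count, node_count, blocks_per_chunk=256):
    """Count how many blocks of a sequential I/O range each node would serve."""
    load = Counter()
    for lba in range(first_lba, first_lba + block_count):
        node, _ = locate_block(lba, node_count, blocks_per_chunk)
        load[node] += 1
    return load


# A 4096-block sequential read against a volume striped over 4 nodes.
load = blocks_per_node(first_lba=0, block_count=4096, node_count=4)
print(dict(load))  # {0: 1024, 1: 1024, 2: 1024, 3: 1024}
```

Because the range is split evenly, every node contributes to the same host request, which is the property the paragraph above describes.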

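MPP architecture can build nodes from standardized hardware and network equipment, so its cost is relatively low. Scale-out SAN storage of this type is mostly midrange, for example HP's LeftHand P4000 series, the IBM XIV series, Dell EqualLogic's PS series, and the ESVA series from Taiwan's Infortrend.

Whether NUMA or MPP, scale-out disk arrays share the characteristic that overall performance grows linearly with the number of nodes. Users can start with a few nodes and, as their applications grow, add more nodes to expand performance and storage space together.

By contrast, in a traditional dual-controller modular disk array, the specifications of the controller's processor, bus, and transfer interfaces determine the ceiling on the system's processing performance and bandwidth. Capacity can be expanded by daisy-chaining more JBOD enclosures, but performance cannot be raised unless the entire controller is replaced. This architecture, which can only add enclosures to expand capacity and must swap in a higher-end controller to upgrade performance, is what is called "scale-up", or vertical, expansion.

In a pure scale-out architecture, every node contains both controllers and disk space, so each added node increases the system's processing performance and capacity together. The problem is that in most cases users' need for storage space is more pressing than their need for access performance; often they simply need more capacity, with no need to raise performance at the same time.

For users who need only more capacity, scale-out expansion looks somewhat "extravagant": a new node does supply more storage space, but it also bundles extra controller performance the user does not yet need, which makes it a costly way to obtain additional capacity.

By comparison, a traditional scale-up dual-controller disk array handles this situation better, where only capacity needs to grow rather than capacity and performance together: attaching JBOD enclosures to the back end is enough. A typical JBOD enclosure is a simple device containing only disk drives, a backplane, SAS or FC interfaces, and power supplies. It depends on a front-end controller to provide access services, but it also costs far less than a complete storage node containing both controllers and drives.

To accommodate more varied user needs, some scale-out storage systems therefore also offer scale-up upgrades, allowing individual nodes to daisy-chain JBOD enclosures on the back end through SAS or FC ports and expand capacity alone, without expanding performance at the same time.

In other words, in a scale-out storage system that also has scale-up expansion, controller nodes and disk enclosures are independent of each other and can be expanded separately; users can add only controllers or only enclosures. When users need more performance, or more performance and capacity together, they can add controller nodes. When they simply need more storage space, they can attach JBOD enclosures to one of the nodes.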

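A scale-out plus scale-up model that can expand in both directions, vertically and horizontally, can therefore meet users' needs more precisely and more flexibly than a pure scale-out or a pure scale-up architecture.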
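The two expansion paths can be modeled with a small Python sketch. Everything numeric here is a made-up assumption for illustration (50,000 IOPS and 16 TB per node, a 32 TB JBOD), and the `Cluster`, `Node`, and `choose_expansion` names are hypothetical; the point is only that attaching a JBOD raises capacity while adding a node raises both capacity and performance.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    iops: int                                     # hypothetical controller performance
    capacity_tb: int                              # capacity of the node's own drive bays
    jbod_tb: list = field(default_factory=list)   # capacity of each attached JBOD


@dataclass
class Cluster:
    nodes: list = field(default_factory=list)

    @property
    def iops(self):
        return sum(n.iops for n in self.nodes)

    @property
    def capacity_tb(self):
        return sum(n.capacity_tb + sum(n.jbod_tb) for n in self.nodes)

    def scale_out(self, node):
        """Add a whole controller node: performance and capacity grow together."""
        self.nodes.append(node)

    def scale_up(self, node_index, jbod_tb):
        """Attach a JBOD enclosure behind one node: only capacity grows."""
        self.nodes[node_index].jbod_tb.append(jbod_tb)


def choose_expansion(need_performance, need_capacity):
    """Pick the expansion path described in the text."""
    if need_performance:
        return "scale_out"
    if need_capacity:
        return "scale_up"
    return "none"


cluster = Cluster([Node(50_000, 16), Node(50_000, 16)])
cluster.scale_up(0, jbod_tb=32)
print(cluster.iops, cluster.capacity_tb)  # 100000 64
cluster.scale_out(Node(50_000, 16))
print(cluster.iops, cluster.capacity_tb)  # 150000 80
```

The linear IOPS sum is itself a simplification of the "performance grows with node count" claim made earlier in the article.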

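Scale-Up, Scale-Out, and Scale-Out + Scale-Up

A scale-up architecture must replace the entire controller to raise performance, so performance upgrades are inflexible and costly. Capacity, however, is easy to expand: just daisy-chain simple JBOD enclosures.

A scale-out architecture expands performance and capacity together simply by adding nodes, with no need to replace the existing controllers, so performance upgrades are more convenient and flexible. But for users who need only more capacity (more disk space, not more controller performance), having to add a whole node to obtain extra capacity is costly.

A scale-out plus scale-up architecture, expandable in both directions, combines the strengths of the two without their weaknesses. When users need more performance, or more performance and capacity together, they can add controller nodes. When they simply need more storage space, they can attach JBOD enclosures to one of the nodes.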

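Intel: 2015 Will Belong to Scale-Out Storage

Intel predicts that scale-out storage systems will replace today's scale-up architecture and reach an 80% share of the global storage networking market in 2015, addressing the challenge of big data.

Overall, Intel divides the evolution of enterprise storage, from past to future, into three stages: scale-up architecture, scale-out architecture, and ubiquitous storage. David Tuhy, general manager of Intel's Storage Division, said that scale-out is now replacing the existing scale-up architecture and will reach an 80% global market share in 2015, becoming the mainstream storage architecture.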

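Storage Is Moving from Scale-Up to Scale-Out, but High Prices Still Hold Back Adoption

The first stage is the scale-up storage system, currently the most common. Under this architecture, an enterprise can expand storage capacity only through a single controller running the storage system, and is therefore bound by that controller's hardware specifications. If capacity requirements surge beyond the limits of the single controller, the enterprise must retire the old equipment and buy a controller with higher hardware specifications. In addition, the whole storage system is linked over an internal network, which makes it hard for enterprises to centrally manage and allocate resources across different storage systems.

Because of these characteristics, scale-up storage often constrains an enterprise's ability to expand and manage flexibly. Intel gives examples: a scale-up architecture has difficulty moving data between public-cloud and private-cloud data centers and does not lend itself to rapid expansion, so an enterprise that wants to grow to PB-scale capacity is forced to keep investing in storage hardware. IT staff can also only manage each storage system separately; they cannot easily manage multiple systems centrally from a single platform, and cannot organize them into a storage resource pool to allocate storage resources effectively.

Scale-out architecture emerged to overcome these limitations, and Intel believes scale-out is now gradually replacing scale-up storage. A scale-out storage system is not bound by the hardware specifications of a single controller node. Each controller running the storage system acts like a modular building block of the data center's storage network; the controllers are linked over an external network and can be managed centrally as a single storage system. More importantly, when an enterprise needs more capacity in the future, it only has to buy more storage controllers to keep expanding the capacity of the same storage system.

Each controller also offers server-class specifications, enough to run more advanced management functions, including hot swapping, compression, thin provisioning, and data deduplication. This lets an enterprise centrally manage the operation of all controller nodes and makes it easier to expand and allocate storage resources.

However, the initial purchase cost of scale-out storage is currently too high for widespread adoption. Kao Chen-wei (高振偉), server and storage analyst at IDC Taiwan, said that a scale-out storage architecture needs many controllers with higher-specification processors to deliver its advanced expansion and management functions, which keeps the price of a complete scale-out system high. In addition, enterprises adopting scale-out storage usually have to redesign their storage network architecture and therefore need the vendor's system integration services to integrate existing storage resources, which adds another cost burden.

Kao said that over the past two years scale-out storage systems have come mainly from large vendors such as HP and IBM, and that high-tech manufacturers such as TSMC and MediaTek, along with education and research institutions and government agencies, have been adopting them. These organizations value the expansion flexibility of scale-out: they use it not only to store ever-growing structured databases; high-tech firms also use it to store large volumes of unstructured customer data and wafer design data, since capacity can be expanded quickly. He acknowledged that smaller storage vendors still lead with traditional scale-up products and will only begin releasing scale-out products next year, which should push prices down and lower the barrier to adoption.

By 2015, storage systems will build on scale-out storage to deliver ubiquitous storage services, supporting hybrid cloud deployments, automation, and client awareness.

With these three characteristics, enterprise data can run securely across public and private cloud infrastructure, with automated management functions such as dynamic expansion, search, and recovery of data within the system. Storage systems will also be client-aware: users' many client devices will produce and consume large amounts of data through activities such as browsing the web, taking photos, and watching video, which not only makes data volumes surge but also requires that the data be accessed and delivered quickly. Storage systems must therefore manage the data produced and used by client devices effectively to make ubiquitous storage services a reality.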

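An Overview of Major Scale-Out SAN Storage Systems

Multinational vendors including EMC, HDS, IBM, HP, and Dell have all added scale-out products to their midrange and high-end SAN disk array lines. Taiwan's Infortrend and Chinese vendors such as Huawei and LoongStore (龍存) also offer scale-out product lines, so users already have plenty of choice.

Most of these scale-out products also provide scale-up expansion, offering users more flexible and varied expansion choices. Below we briefly introduce several products available in Taiwan.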

EMC Symmetrix VMAX

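VMAX is EMC's new flagship, succeeding the long-established Symmetrix DMX series of high-end disk arrays. As the name suggests, the VMAX series abandons the Direct Matrix architecture of the DMX in favor of a new Virtual Matrix architecture.

The basic controller unit of the VMAX is called a VMAX engine. Each engine contains Intel Xeon processors, cache memory, and front-end and back-end I/O interfaces. Multiple VMAX engines are interconnected over an internal RapidIO network and share cache memory and back-end storage resources. Adding VMAX engines expands the processing performance and bandwidth of the whole VMAX, which is scale-out (horizontal) expansion.

VMAX also offers scale-up expansion. Because its disk space is provided by separate DAE disk enclosures, users can start with a single DAE and add more enclosures on their own as more space is needed, flexibly combining different numbers of VMAX engines and DAEs to meet different performance and capacity requirements.

The scale-out and scale-up limits differ by model: the entry-level VMAX 10K allows up to 4 VMAX engines and 1,560 drives, while the top-end VMAX 40K allows up to 8 VMAX engines and 3,200 drives.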

HDS VSP

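VSP is the new flagship HDS introduced after the USP series. Where the USP used a traditional scale-up architecture, the VSP introduces a scale-out architecture.

The VSP controller implements all of its major units as separate, swappable board modules: the virtual storage director (VSD), which supplies the processors; the cache memory board (DCA), which supplies cache memory; the front-end director (FED), which supplies host interfaces; the back-end director (BED), which supplies drive interfaces; and the grid switch (GSW), which interconnects the controller units. Each type of unit can be expanded independently.

In other words, each unit within the VSP controller can be expanded horizontally, scale-out style. Users install different numbers of VSD, DCA, FED, and BED boards in the controller chassis of the VSP rack to assemble controllers with different levels of I/O processing and transfer capability.

Each VSP controller chassis holds up to 4 VSD modules, 8 DCA modules, 8 FED modules, 4 BED modules, and 4 GSW modules, and a system can grow to 2 controller chassis, which doubles those limits.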
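The module limits quoted above reduce to simple arithmetic, sketched below. The per-chassis figures come from the text; the `system_limits` and `over_limit` helpers are hypothetical names introduced only for this illustration and are not part of any HDS tool.

```python
# Per-chassis maximums as stated in the article.
PER_CHASSIS_MAX = {"VSD": 4, "DCA": 8, "FED": 8, "BED": 4, "GSW": 4}
MAX_CHASSIS = 2  # a system can grow to 2 controller chassis


def system_limits(chassis_count):
    """Return the module limits for a system with the given number of chassis."""
    if not 1 <= chassis_count <= MAX_CHASSIS:
        raise ValueError(f"chassis_count must be between 1 and {MAX_CHASSIS}")
    return {module: limit * chassis_count for module, limit in PER_CHASSIS_MAX.items()}


def over_limit(config, chassis_count):
    """List the module types in a proposed configuration that exceed the limits."""
    limits = system_limits(chassis_count)
    return sorted(m for m, count in config.items() if count > limits.get(m, 0))


print(system_limits(2))
# {'VSD': 8, 'DCA': 16, 'FED': 16, 'BED': 8, 'GSW': 8}
print(over_limit({"VSD": 6, "FED": 8}, chassis_count=1))  # ['VSD']
```

A configuration with 6 VSD boards therefore needs the second chassis, while 8 FED boards still fit in one.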

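Besides combining and expanding the units inside the controller in scale-out fashion, users can attach different types and numbers of disk enclosures to the back end of the VSP controller, that is, expand capacity by adding enclosures alone, much like traditional scale-up.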

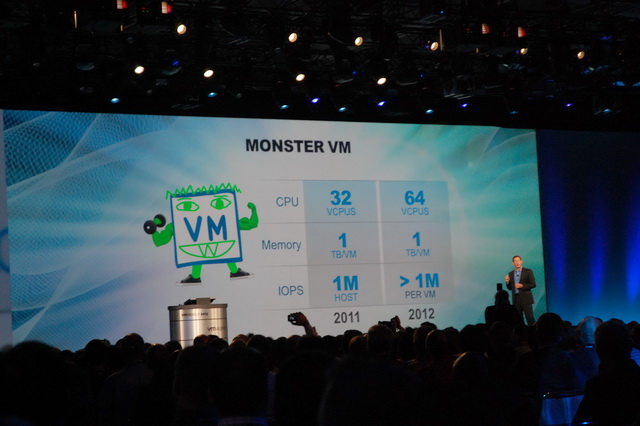

IBM XIV

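XIV is IBM's new-generation midrange-to-high-end SAN disk array. Its basic unit is the Data Module, which is in effect a Linux-based x86 storage server with an Intel quad-core processor, 24GB of memory, and 12 SATA drives. By connecting multiple data modules, users scale out the system's overall I/O processing capability, bandwidth, and storage capacity together.

Compared with other MPP scale-out products, one major difference in the IBM XIV is its asymmetric node architecture. Some of the data modules (nodes) double as interface modules that connect to front-end hosts; they carry FC ports for host connections as well as ports for interconnecting with other data modules. The remaining nodes are plain data modules with only the interconnect ports. By contrast, other MPP scale-out products such as the EqualLogic PS series, LeftHand P4000, and Infortrend ESVA use a symmetric architecture in which all modules are equal and identical.

In earlier generations of XIV the data modules were interconnected over ordinary GbE; the latest, third-generation XIV switches to high-bandwidth InfiniBand as the inter-node network. A single system can expand to 15 data modules. However, because XIV offers no standalone, controller-less JBOD enclosure option, it expands in units of a data module node containing both a controller and 12 drives, and so has no scale-up ability to add disks alone.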

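Dell EqualLogic PS Series

The EqualLogic PS series was among the first midrange SAN disk arrays to adopt a scale-out architecture. Each PS series node is an iSCSI disk array with dual controllers and 12, 24, or 48 drives, and scale-out supports up to 16 nodes. Although each node has dual controllers, EqualLogic offers only active-standby mode, so in practice only one controller per node is serving I/O at a time.

Like the IBM XIV, the EqualLogic PS series expands in units of a complete disk array with dual controllers and 12, 24, or 48 drives. There is no controller-less JBOD enclosure option, so users cannot expand disk space alone in scale-up fashion.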

HP 3PAR

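3PAR was one of the pioneers of scale-out disk arrays. Since 3PAR became part of HP, the product line comprises three series: 3PAR's original F-Class and T-Class, and the newly introduced P10000 V series. Users increase the system's processing capability and bandwidth by adding controller nodes in scale-out fashion. The entry-level F-Class can be configured with 2 to 4 controller nodes, and the T-Class and P10000 V series with 2 to 8.

In the earlier T-Class, controllers are interconnected in a mesh over PCI-X on the backplane; the backplane of the latest P10000 V series moves to higher-bandwidth PCIe. Each controller chassis provides expansion slots for host-side interface cards (FC or iSCSI) or back-end disk interface cards (FC), in whatever types and quantities are needed.

Because HP 3PAR storage space is provided by separate FC disk enclosures, enclosures can be daisy-chained to individual controller nodes in scale-up fashion to expand capacity alone.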

HP LeftHand P4000

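P4000 is the iSCSI disk array line HP obtained by acquiring LeftHand. At its core is virtualization software called SAN/iQ; each P4000 controller node is in effect an HP ProLiant server running SAN/iQ. The P4000 series is now in its second generation, G2, with several models: the 2U P4300 G2 and P4900 G2, the 4U P4500 G2, and the blade-based P4800 G2. One chassis or one blade module is one controller node. Every model starts at a minimum of 2 controller nodes and scales out to a maximum of 32.

Apart from the blade-based P4800 G2, all rack-mount P4000 nodes combine the controller with a disk chassis, so the controller node also supplies storage space. Each controller chassis contains a fixed number of drives (8 or 12), and expansion must be done in units of a whole controller chassis. There is no standalone JBOD enclosure option for adding disk space alone, and hence no scale-up capability.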

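Infortrend ESVA

ESVA is the midrange SAN disk array family from Infortrend, and the first scale-out SAN storage system developed by a Taiwanese vendor. The family is now in its third generation. In addition to scaling out performance and capacity together, it can expand capacity alone in scale-up fashion.

An ESVA controller node is a 3U chassis with dual controllers and 16 drive bays. Scale-out allows up to 12 ESVA controller nodes (24 controllers in all) to form a single system. Each ESVA controller also has SAS expansion ports for external JBOD enclosures, so users can daisy-chain JBOD enclosures to individual controllers and expand disk capacity alone.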

Oracle Pillar Axiom 600

This is the midrange storage line Oracle obtained through its acquisition of Pillar Data Systems, the maker of the Axiom.

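The basic units of the Pillar Axiom 600 are Brick disk modules, populated with FC, SSD, or SATA drives, paired with Slammer controller modules.

Each Brick has 2 RAID controllers of its own, but it does not present storage directly to end users; the space is presented to front-end users through a Slammer. Each Slammer likewise contains two controllers, and there are 4 Slammer types to choose from: FC SAN, iSCSI SAN, FC+iSCSI SAN, and NAS.

Pairing Bricks with different types of Slammer provides different kinds of storage service. The front-end Slammers and the back-end Bricks are connected in a mesh over an FC network, so any Slammer can access the space of any Brick.

The Pillar Axiom 600 architecture is quite distinctive. A typical disk array consists of controllers and JBOD enclosures: the controller handles I/O processing, RAID computation, cache memory, and the interfaces to front-end hosts, while the back-end JBOD enclosures simply supply disk space.

The Pillar Axiom 600 splits the traditional controller's work in two. RAID computation is handled by the RAID controllers inside the underlying Bricks, while I/O processing, cache memory, and host connectivity are handled by the Slammers.

A Brick is therefore not a simple JBOD enclosure, and a Slammer is not a disk array controller in the traditional sense; instead, the Slammer and the controllers inside the Bricks divide the work to complete the full I/O path.

Both Slammers and Bricks can be expanded horizontally in scale-out fashion; an Axiom system can have up to 4 Slammers and 64 Bricks. The two are expanded independently, so users can add only Slammers or only Bricks. Each Slammer has 26 FC ports for connecting back-end Bricks, so users can connect more Bricks to an individual Slammer to add storage capacity, expanding capacity in scale-up fashion.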
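As a recap, the scale-out limits and scale-up support described for each product above can be collected into a small Python table and filtered. The figures are the ones quoted in this article; the `Product` tuple and the `capacity_only_candidates` helper are hypothetical names used only for this summary.

```python
from typing import NamedTuple


class Product(NamedTuple):
    name: str
    max_units: int   # largest scale-out unit count cited in the article
    unit: str        # what one scale-out unit is
    scale_up: bool   # can capacity be added alone with disk-only enclosures?


PRODUCTS = [
    Product("EMC Symmetrix VMAX 40K", 8, "engines", True),
    Product("HDS VSP", 2, "controller chassis", True),
    Product("IBM XIV", 15, "data modules", False),
    Product("Dell EqualLogic PS", 16, "nodes", False),
    Product("HP 3PAR T-Class / P10000 V", 8, "controller nodes", True),
    Product("HP LeftHand P4000", 32, "controller nodes", False),
    Product("Infortrend ESVA", 12, "controller nodes", True),
    Product("Oracle Pillar Axiom 600", 4, "Slammers", True),
]


def capacity_only_candidates(products):
    """Products that let a user add capacity without adding controllers."""
    return [p.name for p in products if p.scale_up]


for name in capacity_only_candidates(PRODUCTS):
    print(name)
```

Five of the eight products support capacity-only expansion; IBM XIV, Dell EqualLogic PS, and HP LeftHand P4000 expand only in whole nodes.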