I found an excellent article on ITHome (Taiwan) about the latest trend in tiered enterprise storage with SSDs. It’s in Chinese, so you may need Google Translate to read it.

![68700_4_3_l[1] 68700_4_3_l[1]](http://www.modelcar.hk/wp-content/uploads/2011/07/68700_4_3_l1.jpg)

Update:

Aug-17-2011

There is a new topic about distributed SSD caching in enterprise SANs and servers. New SSD caching products are coming from EMC and NetApp, but where is EqualLogic in this area?

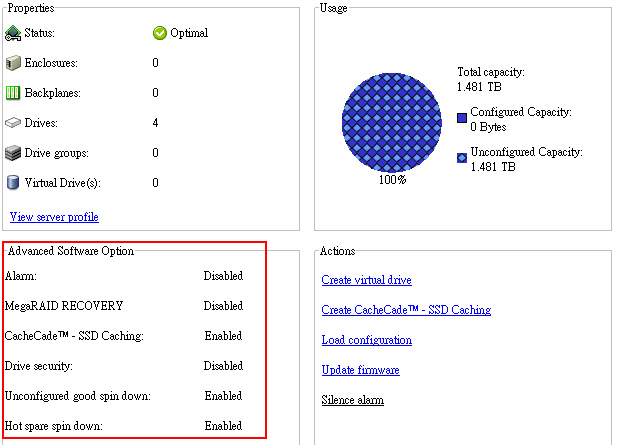

Dell has also just released its SSD caching solution (CacheCade) for the H700 and H800 RAID cards with 1GB NVRAM, but it is quite expensive and not really worth the money (for example, one 149GB Solid State Drive SAS 3Gbps 2.5in HotPlug Hard Drive, 3.5in HYB CARR costs US$4,599.00).

CacheCade is used to improve random read performance of the Hard Disk Drive (HDD) based Virtual Disks. A solid-state drive (SSD) is a data storage device that uses solid-state memory to store persistent data. SSDs significantly increase the I/O performance (IOPS) and/or write speed in Mbps from a storage device. With Dell Storage Controllers, you can create a CacheCade using SSDs. The CacheCade is then used for better performance of the storage I/O operations. Use either Serial Attached SCSI (SAS) or Serial Advanced Technology Attachment (SATA) SSDs to create a CacheCade.

Create a CacheCade with SSDs in the following scenarios:

Maximum application performance—Create a CacheCade using SSDs to achieve higher performance without wasted capacity.

Maximum application performance and higher capacity—Create a CacheCade using SSDs to balance the capacity of the CacheCade with high performance SSDs.

Higher capacity—If you do not have empty slots for additional HDDs, use SSDs and create a CacheCade. This reduces the number of HDDs required and increases application performance.

The CacheCade feature has the following restrictions:

Only SSDs with the proper Dell identifiers can be used to create a CacheCade.

If you create a CacheCade using SSDs, the SSD properties are still retained. At a later point in time, you can use the SSD to create virtual disks.

A CacheCade can contain either SAS drives or SATA drives but not both.

The SSDs in a CacheCade do not have to be the same size. The CacheCade size is automatically calculated as follows:

CacheCade size = capacity of the smallest SSD × number of SSDs

The unused portion of each larger SSD is wasted and cannot be used as an additional CacheCade or an SSD-based virtual disk.

The maximum size of the CacheCade cache pool is 512 GB. If you create a CacheCade larger than 512 GB, the storage controller still uses only 512 GB.
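To make the sizing rules above concrete, here is a minimal Python sketch. It assumes sizes are given in whole GB and simply applies the quoted formula (smallest SSD × number of SSDs) together with the 512 GB pool cap; the function name `cachecade_size` is hypothetical and not part of any Dell or LSI tool.

```python
# Hypothetical helper illustrating the quoted CacheCade sizing rules.
# Assumption: all sizes are in GB.
POOL_LIMIT_GB = 512  # controller never uses more than this for caching


def cachecade_size(ssd_sizes_gb):
    """Return (cachecade_size, usable_cache, wasted) in GB."""
    if not ssd_sizes_gb:
        raise ValueError("a CacheCade needs at least one SSD")
    smallest = min(ssd_sizes_gb)
    # Every member contributes only as much as the smallest SSD.
    size = smallest * len(ssd_sizes_gb)
    # Capacity above the smallest drive on each larger SSD is lost.
    wasted = sum(ssd_sizes_gb) - size
    # Anything beyond 512 GB is ignored by the controller.
    usable = min(size, POOL_LIMIT_GB)
    return size, usable, wasted


print(cachecade_size([149, 149, 256]))
print(cachecade_size([300, 300]))
```

For example, two 149GB SSDs plus one 256GB SSD give a 447GB CacheCade with 107GB wasted, while two 300GB SSDs give a 600GB CacheCade of which the controller uses only 512GB. Mixing sizes is allowed, but matching them avoids the waste.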

The CacheCade is supported only on Dell PERC H700 and H800 controllers with 1 GB NVRAM and firmware version 7.2 or later.

In a storage enclosure, the total number of logical devices including virtual disks and CacheCade(s) cannot exceed 64.

NOTE: The CacheCade feature is available from the first half of calendar year 2011.

NOTE: In order to use CacheCade for the virtual disk, the write policy of the HDD-based virtual disk must be set to Write Back or Force Write Back, and the read policy must be set to Read Ahead or Adaptive Read Ahead.
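The prerequisite in the note above boils down to a simple check on the virtual disk's cache policies. This is a hypothetical helper, not an OpenManage or MegaCLI call; the policy strings are copied from the note.

```python
# Hypothetical check of the CacheCade policy prerequisite for an HDD-based VD.
# Policy names are taken verbatim from the OpenManage note.
ALLOWED_WRITE = {"Write Back", "Force Write Back"}
ALLOWED_READ = {"Read Ahead", "Adaptive Read Ahead"}


def can_use_cachecade(write_policy: str, read_policy: str) -> bool:
    """True if both policies satisfy the CacheCade requirement."""
    return write_policy in ALLOWED_WRITE and read_policy in ALLOWED_READ


print(can_use_cachecade("Write Back", "Adaptive Read Ahead"))
print(can_use_cachecade("Write Through", "Read Ahead"))
```

Both conditions must hold, so a VD left in Write Through mode will not be cached even if its read policy is correct.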

Aug-24-2011

I’ve found out that only the H700/H800 RAID cards with 1GB NVRAM support FastPath and CacheCade. Unlike LSI’s own products, which require a hardware or software key, Dell’s LSI OEM H700/H800 provides these features free of charge!!!

In addition, if you are tired of using OpenManage to manage your storage, you can try LSI’s MegaRAID Storage Manager (aka MSM), a client-based GUI tool similar to the previous Array Manager.

However, even if you have an H800 with 1GB NV cache, one big question remains, since it only supports the SAS interface on the PowerVault MD1200/MD1220: where can we find a cheap, compatible SAS SSD as an alternative? (OCZ still costs a lot.) If you know, please let me know.

For those of you who are interested in enabling H700 with cheap SATA SSD for CacheCade and FastPath, here is the How To Link, but it’s in Chinese.

Aug-27-2011

CacheCade software enables SSDs to be configured as a secondary tier of cache to maximize transactional I/O performance. Adding one SSD can substantially improve IOPS performance, as opposed to adding more hard drives to an array.

By utilizing SSDs in front of hard disk drives (HDDs) to create a high-performance controller cache of up to 512GB, CacheCade allows very large data sets to be present in cache and delivers up to a 50X performance improvement in read-intensive applications, such as file, Web, OLTP and database servers.

The solution is designed to accelerate the I/O performance of HDD-based arrays while minimizing investments in SSD technology.

CacheCade Characteristics

The following list contains various characteristics of CacheCade technology:

- A CacheCade virtual disk cannot be created on a controller where the CacheCade feature is disabled. Only controllers with a 1GB NVDIMM will have the CacheCade feature enabled.

- Only SSDs that are non-foreign and in Unconfigured Good state can be used to create a CacheCade virtual disk.

- Rotational drives cannot be used to create a CacheCade virtual disk.

- Multiple CacheCade virtual disks can be created on a controller, although there is no benefit in creating more than one.

- The total size of all the CacheCade virtual disks will be combined to form a single secondary cache pool. However, the maximum size of the pool will be limited to 512GB.

- Virtual disks containing secured Self-Encrypting Disks (SEDs) or SSDs will not be cached by CacheCade virtual disks.

- CacheCade is a read cache only. Write operations will not be cached by CacheCade.

- IOs equal to or larger than 64KB are not cached by CacheCade virtual disks.

- A foreign import of a CacheCade virtual disk on a controller with the CacheCade feature disabled will fail.

- A successfully imported CacheCade VD will immediately start caching.

- CacheCade VDs are based on RAID 0 (R0). As such, the size of the VD will be the number of contributing drives × the size of the smallest contributing drive.

- In order to use CacheCade for the virtual disk, the write policy of the HDD-based virtual disk must be set to Write Back or Force Write Back, and the read policy must be set to Read Ahead or Adaptive Read Ahead.

- CacheCade has NO interaction with the battery learn cycle or any battery processes. The battery learn behavior operates completely independently of CacheCade. However, during the battery learn cycle, when the controller switches to Write-Through mode due to low battery power, CacheCade will be disabled.

NOTE:

Any process that may force the controller into Write-Through mode (such as RAID Level Migration or Online Capacity Expansion) will disable CacheCade.
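Taken together, the characteristics and note above define which I/Os the (read-only) CacheCade will serve. The following Python sketch models them as a single admission check. The classes and field names are hypothetical, and it assumes the 64KB rule means an I/O must be strictly smaller than 64KB to be cached, as the list states.

```python
from dataclasses import dataclass

LARGE_IO_BYTES = 64 * 1024  # I/Os of 64KB or more bypass CacheCade


@dataclass
class VirtualDisk:
    secured_sed: bool = False  # VD built on secured Self-Encrypting Disks
    ssd_based: bool = False    # VD built on SSDs


@dataclass
class Controller:
    cachecade_enabled: bool      # only with the 1GB NVDIMM
    write_through: bool = False  # e.g. battery learn, RLM, OCE in progress


def is_cacheable(ctrl: Controller, vd: VirtualDisk, op: str, size_bytes: int) -> bool:
    """Hypothetical admission check mirroring the listed characteristics."""
    # Feature missing, or disabled by Write-Through mode.
    if not ctrl.cachecade_enabled or ctrl.write_through:
        return False
    # SED- and SSD-based VDs are never cached.
    if vd.secured_sed or vd.ssd_based:
        return False
    # CacheCade (first version) caches reads only.
    if op != "read":
        return False
    return size_bytes < LARGE_IO_BYTES


ctrl = Controller(cachecade_enabled=True)
print(is_cacheable(ctrl, VirtualDisk(), "read", 4096))
print(is_cacheable(ctrl, VirtualDisk(), "read", 65536))
```

Ordering the checks from controller to VD to individual I/O mirrors how the list is structured: controller-wide conditions switch caching off entirely before any per-I/O rule is consulted.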

Reconfiguration of CacheCade Virtual Disks

A CacheCade virtual disk that is made up of more than one SSD will automatically be reconfigured upon a removal or failure of a member SSD.

The virtual disk will retain an Optimal state and will adjust its size to reflect the remaining number of member disks.

If auto-rebuild is enabled on the controller, when a previously removed SSD is inserted back into the system or replaced with a new compatible SSD, the CacheCade will once again be automatically reconfigured and will adjust its size to reflect the addition of the member SSD.
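The reconfiguration behaviour described above can be modelled with a small toy class. This is a hypothetical sketch, not a Dell or LSI API: it assumes the RAID 0 sizing rule from the characteristics list and treats auto-rebuild as a simple on/off flag.

```python
class CacheCadeVD:
    """Toy model of a multi-SSD CacheCade VD (hypothetical, not a vendor API)."""

    def __init__(self, member_sizes_gb, auto_rebuild=True):
        self.members = list(member_sizes_gb)
        self.auto_rebuild = auto_rebuild
        self.state = "Optimal"  # stays Optimal through member changes

    @property
    def size_gb(self):
        # RAID 0 sizing: smallest member times number of members.
        return min(self.members) * len(self.members) if self.members else 0

    def remove_member(self, size_gb):
        # Removal or failure shrinks the VD instead of degrading it.
        self.members.remove(size_gb)

    def insert_member(self, size_gb):
        # A re-inserted or replacement SSD is absorbed only with auto-rebuild.
        if self.auto_rebuild:
            self.members.append(size_gb)
            return True
        return False


vd = CacheCadeVD([200, 200, 200])
vd.remove_member(200)
print(vd.size_gb, vd.state)
vd.insert_member(200)
print(vd.size_gb)
```

Because the VD simply resizes, losing a cache SSD costs cache capacity rather than data, which is consistent with CacheCade being a read-only cache whose contents are also on the HDDs.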

Sep-4-2011

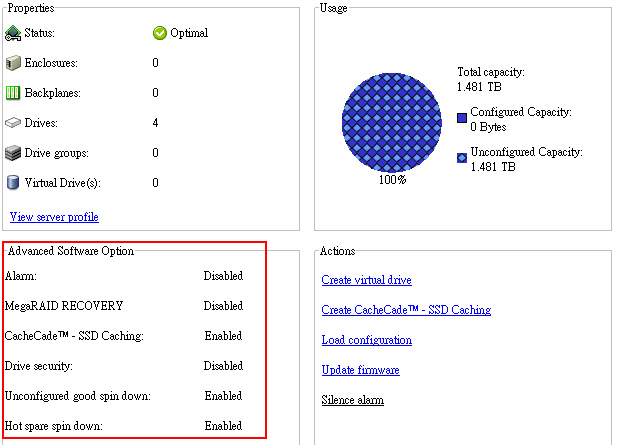

LSI Corp. today updated its MegaRAID CacheCade software to support write and read solid state drive (SSD) caching via a controller, providing faster access to more frequently used data.

LSI MegaRAID CacheCade Pro 2.0 speeds application I/O performance on hard drives by using SSDs to cache frequently accessed data. The software is designed for high-I/O, transaction-based applications such as Web 2.0, email messaging, high-performance computing and financials. The caching software works on LSI MegaRAID 9260, 9261 and 9280 series 6Gb/s SATA and SAS controller cards.

LSI delivered the first version of CacheCade software about a year ago with read-only SSD caching. MegaRAID CacheCade Pro 2.0 is priced at $270 and is available to distributors, system integrators and value added resellers. LSI’s CacheCade partners include Dell, which in May began selling the software with Dell PowerEdge RAID Controller (PERC) H700 and H800 cards.

“What we want to do is close the gap between powerful host processors and relatively slow hard disk drives,” said Scott Cleland, LSI’s product marketing manager for the channel. “Hosts can take I/O really fast, but the problem is traditional hard disk drives can’t keep up.”

LSI claims the software is the industry’s first SSD technology to offer both read and write caching on SSDs via a controller.

LSI lets users upgrade a server or array by plugging in a controller card with CacheCade. Cleland said users can place hot-swappable SSDs into server drive slots and use LSI’s MegaRAID Storage Manager to create CacheCade pools. The software will automatically place more frequently accessed data into the cache pools.
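Cleland does not describe how CacheCade decides what counts as "frequently accessed", so the following is only an illustration of the general idea of hot-data selection, not LSI’s algorithm. It assumes a simple access log of block IDs, a pool capacity counted in blocks, and a hypothetical `min_hits` threshold.

```python
from collections import Counter


def pick_hot_blocks(access_log, capacity_blocks, min_hits=2):
    """Return the set of block IDs to keep in the SSD cache pool.

    Hypothetical policy: rank blocks by access count, skip blocks seen
    fewer than min_hits times, and keep at most capacity_blocks of them.
    """
    counts = Counter(access_log)
    hot = [blk for blk, n in counts.most_common() if n >= min_hits]
    return set(hot[:capacity_blocks])


log = [1, 1, 1, 2, 2, 3, 4, 4, 4, 4]
print(pick_hot_blocks(log, 2))
```

The `min_hits` threshold keeps one-off reads (block 3 above) from displacing data that is genuinely reused, which is the point of caching only the hot working set on a small SSD.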

“In traditional SSD cache and HDD [hard disk drive] configurations, the HDDs and SSDs are exposed to the host,” Cleland said. “You have to have knowledge of the operating system, file system and application. With CacheCade, the SSDs are not exposed to the host. The controller is doing the caching on the SSDs. All the I/O traffic is going to the controller.”

SSD analyst Jim Handy of Objective Analysis said it took time for LSI to build in the write caching capability because “write cache is phenomenally complicated.”

With read-only cache, data changes are copied in the cache and updated on the hard drive at the same time. “If the processor wants to update the copy, then the copy in cache is invalid. It needs to get the updated version from the hard disk drive,” Handy said of read-only cache.

With write cache, the data is written to the cache first and updated on the hard drive later, making sure the original on the hard drive is brought up to date before the copy is deleted from cache.

LSI also has a MirrorCache feature, which prevents data loss when data has been written to cache but not yet updated on the hard drive.
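The difference Handy describes can be sketched with two toy cache classes over a dict that stands in for the hard drive. This is an illustrative model only: the class names are hypothetical, it ignores capacity limits, and it does not model MirrorCache, which would protect the not-yet-flushed blocks tracked in `dirty` here.

```python
class ReadOnlyCache:
    """Read cache: writes go straight to disk and invalidate the cached copy."""

    def __init__(self, disk):
        self.disk, self.cache = disk, {}

    def read(self, blk):
        if blk not in self.cache:
            self.cache[blk] = self.disk[blk]  # miss: fetch from the HDD
        return self.cache[blk]

    def write(self, blk, data):
        self.disk[blk] = data          # HDD is updated immediately
        self.cache.pop(blk, None)      # cached copy is now invalid


class WriteBackCache:
    """Write cache: writes land in cache first; the HDD is updated on eviction."""

    def __init__(self, disk):
        self.disk, self.cache, self.dirty = disk, {}, set()

    def read(self, blk):
        if blk not in self.cache:
            self.cache[blk] = self.disk[blk]
        return self.cache[blk]

    def write(self, blk, data):
        self.cache[blk] = data
        self.dirty.add(blk)            # HDD copy is now behind the cache

    def evict(self, blk):
        if blk in self.dirty:          # bring the original up to date first
            self.disk[blk] = self.cache[blk]
            self.dirty.discard(blk)
        self.cache.pop(blk, None)


disk = {0: "a"}
wb = WriteBackCache(disk)
wb.write(0, "b")
print(disk[0], wb.read(0))  # HDD still holds the old value until eviction
wb.evict(0)
print(disk[0])
```

The write-back version acknowledges writes without touching the HDD, which is where the speed-up for write-heavy workloads comes from, and also why the dirty data needs protection such as MirrorCache.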

Handy said read and write caching is faster than read-only caching.

“Some applications won’t benefit from [read and write caching],” Handy said. “They won’t notice it so much because they do way more reads than writes. For instance, software downloads are exclusively reads. Other applications, like OLTP [online transaction processing], use a 50-50 balance of read-writes. In these applications, read-write is really important.”

Sep-5-2011

The SSD Review did some excellent research in their latest article, “LSI MegaRAID CacheCade Pro 2.0 Review – Total Storage Acceleration Realized”.
