This happened two days ago and was followed by a very happy ending.

Around 8 AM, I received an SMS alert saying one of the email servers wasn't responding, followed by many emergency calls from clients on that email server (obviously…haha).

I logged into vCenter and found a question mark on top of the email server's icon. I soon realized the EQL volume it sits on was full. SAN HQ had also generated a few warnings over the previous days, but I was too busy and too overconfident, so I ignored them all, thinking the volume would make it through the weekend.

The solution is quite simple. First, increase the size of the thin-provisioned EQL volume, then extend the existing VMFS volume in vCenter, and finally answer the question on the stunned (i.e., suspended) VM with Retry. Voilà, it's back to normal, with no need to restart or shut down the VM at all.

This is really beautiful! I was a bit depressed when I was told earlier that this would only work with ESX 5.0, which was also confirmed by a Dell storage expert. Then the other day I found in Dell's official documentation that VMware's Thin Provisioning Stun feature does work with ESX 4.1. I was completely confused and didn't know who was right until two days ago.

FYI, I had a nasty EQL thin-provisioned volume issue last year (EQL firmware 4.2): the whole over-provisioned thin volume simply went offline when it reached its maximum limit, and all my VMs on that volume crashed and had to be restarted manually, even after I extended the volume in EQL and vCenter.

No more worries. Thank you so much, EqualLogic, you really made my day!

Finally, some of you may be wondering why I didn't upgrade to ESX 5.0. Why should I? Normally I wait for a major release like ESX 3.5 or ESX 4.1 before making the big move.

There is another issue: the latest Veeam Backup & Replication 6.0 still has many minor problems with ESX 5.0. Frankly speaking, I don't see many advantages in moving to ESX 5.0 while my ESX 4.1 environment is so rock solid. New features such as Storage DRS, SSD caching or VSA are all minor compared with VAAI, iSCSI multipathing and thin provisioning in ESX 4.1. In addition, the latest EQL firmware features are mostly backward compatible with older ESX versions, which is why I still prefer to stay on ESX 4.1 for at least another year.

Oh… one thing I missed: I really do hope EQL firmware 5.1 Thin Reclaim can work on ESX 4.1, but it seems to be mission impossible. Never mind, I've got plenty of space, and moving a VM off a dirty volume isn't a big deal, so I can live with manually creating a new clean volume.

Update Jan 17, 2012

Today I received the latest EqualLogic newsletter, and it also indicates that this VMware Thin Provisioning Stun feature is supported with ESX 4.1. I hope it's not a typo.

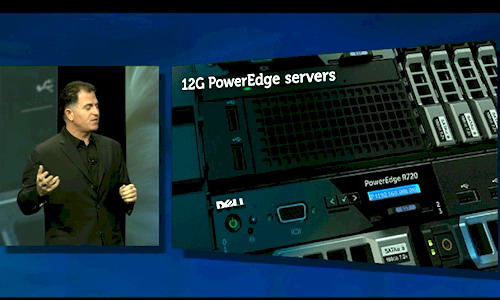

Dell EqualLogic Firmware Maintenance Release v5.1.2 (October 2011)

Dell EqualLogic Firmware v5.1.2 is a maintenance release on the v5.1 release stream. Firmware v5.1 release stream introduces support for Dell EqualLogic FS7500 Unified Storage Solution, and advanced features like Enhanced Load Balancing, Data Center Bridging, VMware Thin Provisioning awareness for vSphere v4.1, Auditing of Administrative and Active Directory Integration. Firmware v5.1.2 is a maintenance release with bug fixes for enhanced stability and performance of EqualLogic SAN.

Update Mar 5, 2012

Today I found out that Thin Provisioning Stun is actually a hidden API in ESX 4.1, and apparently only two storage vendors support it in ESX 4.1, one of them being EqualLogic. No wonder this feature worked even with firmware v5.0.2, when I thought at least v5.1 was required. Thanks, EQL, this gives me a bit more time to upgrade to FW 5.1 or even FW 5.2.

Thin Provisioning Stun is officially a vSphere 5 VAAI primitive. It was included in vSphere 4.1 and some array plugins support it, but it was never officially listed as a vSphere 4 primitive.

Out of Space Condition (AKA Thin Provisioning Stun)

Running out of capacity is a catastrophe, but it’s easy to ignore the alerts in vCenter until it’s too late. This command allows the array to notify vCenter to “stun” (suspend) all virtual machines on a LUN that is running out of space due to thin provisioning over-commit. This is the “secret” fourth primitive that wasn’t officially acknowledged until vSphere 5 but apparently existed before. In vSphere 5, this works for both block and NFS storage. Signals are sent using SCSI “Check Condition” operations.
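To make the difference between the two outcomes in this post concrete, here is a toy shell model of a thin volume running out of space with and without stun support. It is only a sketch of the logic, assuming the array enforces a fixed maximum in-use size on the thin volume; it does not talk to ESX or the array, and every variable and function name (lun_limit, write_gb, grow_lun, retry_write and so on) is hypothetical.

```shell
#!/bin/sh
# Toy model (hypothetical, not VMware or EqualLogic code) of an
# out-of-space condition on a thin-provisioned LUN. Sizes are in GB.

lun_limit=100       # hypothetical maximum in-use space of the thin volume
lun_used=98         # hypothetical space already written
stun_supported=1    # 1 = array/host pair honours the stun signal
lun_state="online"
vm_state="running"

# Simulate a guest write of $1 GB landing on the LUN.
write_gb() {
  size=$1
  if [ "$lun_state" != "online" ]; then
    vm_state="crashed"            # I/O to an offline volume simply fails
    return 1
  fi
  if [ $((lun_used + size)) -gt "$lun_limit" ]; then
    if [ "$stun_supported" -eq 1 ]; then
      # Array reports out of space; the host pauses only the VMs on
      # this LUN and waits for an administrator to answer Retry.
      vm_state="stunned"
    else
      # The firmware 4.2 behaviour described earlier: the whole
      # volume goes offline and the VMs on it crash.
      lun_state="offline"
      vm_state="crashed"
    fi
    return 1
  fi
  lun_used=$((lun_used + size))
  vm_state="running"
  return 0
}

# Raise the thin volume's limit by $1 GB (the "increase the EQL volume" step).
grow_lun() {
  lun_limit=$((lun_limit + $1))
}

# Answer Retry on a stunned VM: resume it and reissue the failed write.
retry_write() {
  [ "$vm_state" = "stunned" ] || return 1
  write_gb "$1"
}
```

With the defaults, `write_gb 5` leaves `vm_state` at `stunned`; after `grow_lun 50`, `retry_write 5` brings it back to `running` with `lun_used` at 103. Setting `stun_supported=0` before the first write instead reproduces the offline-and-crash outcome, where growing the volume afterwards no longer helps the VMs that already failed.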

VAAI Commands in vSphere 4.1

```
esxcli corestorage device list
esxcli vaai device list
esxcli corestorage plugin list
```


![VENDORS](http://www.modelcar.hk/wp-content/uploads/2011/12/VENDORS1.jpg)